Brain Science Provides New Approach to Patient Safety Training

November/December 2013

No matter how rigorous and well-formulated patient safety training may be, attendees cannot escape the normal physiological process of forgetting, which can wipe out much of what they learn within 30 days. This “forgetting curve” is a daunting consideration for health quality leaders who must ensure that their staff members continue to meet Joint Commission and other healthcare quality standards long after a training course is completed. To fight the forgetting curve, we developed a novel, evidence-based methodology called spaced education (SE), which combines game mechanics with two core findings in brain science: the spacing effect and the testing effect. In more than 16 large randomized trials, we have shown that SE can improve long-term retention of learning, boost learner engagement, and improve patient care. To our surprise, participants really like SE programs! Unlike our trials with traditional web-based teaching modules in the early 2000s, we did not have to take extraordinary measures to get participants to complete the program. SE’s intermittent challenges grabbed participants’ attention and took very little of their time.

This article describes several successful applications of SE to improve patient safety and infection control. These include one led by Dr. Tim Shaw of the University of Sydney, who studied this new approach with approximately 370 new physicians at Harvard’s teaching hospitals, and another among approximately 260 nurses at the Veterans Affairs Boston Healthcare System. Both demonstrate the efficacy of SE and its strong potential for nursing, residency programs, and other professional health care training.

Spaced Education: A Novel Yet Simple Solution

Spaced education is an evidence-based methodology grounded in two psychology research findings: the spacing effect and the testing effect. The spacing effect refers to the finding that educational encounters that are spaced and repeated over time (spaced distribution) result in more efficient learning and improved retention, compared to massed distribution at a single time-point (Glenberg & Lehmann, 1980; Toppino et al., 1991).

SE is structured as a series of detailed questions and explanations delivered via computer or mobile device over spaced intervals of time. Learners receive an email containing a clinical scenario and a multiple-choice question. Upon submitting their answers, participants are immediately presented with the correct answer and learning points germane to the question. The questions are then re-presented over spaced intervals in a series of cycled-reviews (interval reinforcements) to take advantage of the power of the spacing effect.

By using a testing format during the SE encounters, the methodology also takes advantage of the testing effect, the psychological finding that testing of learned material does not serve merely to evaluate a learner’s performance. Rather, testing actually alters the learning process to significantly improve knowledge retention (Gates, 1917; Spitzer, 1939). Such an impact of the testing effect was demonstrated in a study of 120 college students (Roediger & Karpicke, 2006). Students who studied a prose passage for 7 minutes and were immediately tested on the passage over a 7-minute period had significantly better retention of the material 1 week later, compared to students who spent 14 minutes studying the prose in the absence of testing.

Applying Spaced Education to Patient Safety and Quality Improvement Programs

Can SE improve patient safety behaviors among incoming interns at the Massachusetts General Hospital and the Brigham & Women’s Hospital? This was the question posed by Dr. Tim Shaw from the University of Sydney, who was first introduced to the SE methodology in 2009 during his year-long visiting professorship at Harvard University. Dr. Shaw had managed the development of the Australian National Patient Safety Education Framework and worked on the WHO Patient Safety Curriculum Framework for Medical Schools and the Patient Safety Education Project (PSEP) in the United States. Through this, he appreciated the need to move beyond “death by PowerPoint” and find simple yet effective methods to improve patient safety training.

To determine whether SE can improve patient safety behaviors, Dr. Shaw and Dr. Tejal Gandhi conducted a randomized trial among the 371 incoming physician-trainees. (Dr. Gandhi was chief quality and safety officer at Partners HealthCare at the time of the study and is currently president of the National Patient Safety Foundation.) They compared the effectiveness of SE versus their standard approach (an online slide show followed by a quiz) for improving the patient-safety behaviors mandated in The Joint Commission’s National Patient Safety Goals (NPSG). While post-test scores were higher among the SE-trained interns, the most interesting results were generated when the interns were presented with a patient safety scenario in a simulation center. The interns were asked to place a central line on the simulated patient, but the test was really to see if they performed 13 key patient safety behaviors, such as hand washing and use of the wristband to identify the patient. Those interns who received SE had substantially better performance on these measures than those who received standard training. In particular, the interns in surgical specialties benefited from the SE methodology. In contrast to standard online education, Drs. Shaw and Gandhi report in BMJ Quality and Safety (2012), “SE was more contextually relevant to trainees and was engaging. SE impacted more significantly on both self-reported confidence and the behavior of surgical residents in a simulated scenario.”

In separate research that I directed, we posed a different question: Can SE improve healthcare quality and clinical behaviors among primary care practitioners? To answer this, we performed a 2-year randomized trial to determine whether SE could improve prostate cancer screening patterns among primary care physicians, nurse practitioners, and physician assistants in eight northeastern Veterans Affairs (VA) hospitals (Kerfoot et al., 2007). Our prior data showed that almost 20% of prostate-specific antigen (PSA) testing was inappropriate based upon the guideline-directed criteria.

Primary care providers were recruited via email and randomized into two cohorts: cohort 1 received SE over a 36-week period (0-2 emails per week), while cohort 2 (controls) received no intervention and represented the current standard of care within the VA system. Each SE question presented a clinical scenario and asked whether or not it was appropriate to perform PSA screening, with participants receiving immediate feedback after submitting their answers. An extremely popular component of the program was the set of clinical scenarios designed to be humorous, asking clinicians to perform PSA screening on Snow White’s seven dwarfs, superheroes (including Blade, who is now retired and owns several SuperCut stores), characters from the TV series M*A*S*H, and movie characters (including Forrest Gump and the groundskeeper from Caddyshack).

Ninety-five primary care providers enrolled in the trial. We then tracked the number of PSA screening tests ordered by participants in the study. Over the 36-week intervention period, SE clinicians ordered fewer inappropriate PSA tests than the control group (762 versus 1,145, respectively), which in logistic regression models represented a 26% relative reduction in inappropriate screening. We then followed these clinicians’ practice patterns after they completed the intervention to assess the durability of the effects observed with the SE program. In the 72-week period after the intervention was completed, SE clinicians continued to order fewer inappropriate PSA tests (1,028 versus 1,906 by control clinicians), representing a durable 40% relative reduction in inappropriate screening. Acceptability of the SE program was extremely high: 93% requested to participate in future SE programs.

It is rare for an educational intervention to generate demonstrable improvements in clinical behavior, but SE has achieved this important outcome. Of even greater significance is the sustained effectiveness of the program more than 1 year after completing the intervention. Harvard was so impressed with the research results and the novel simplicity of the methodology that their Office of Technology Development submitted a patent application on it.

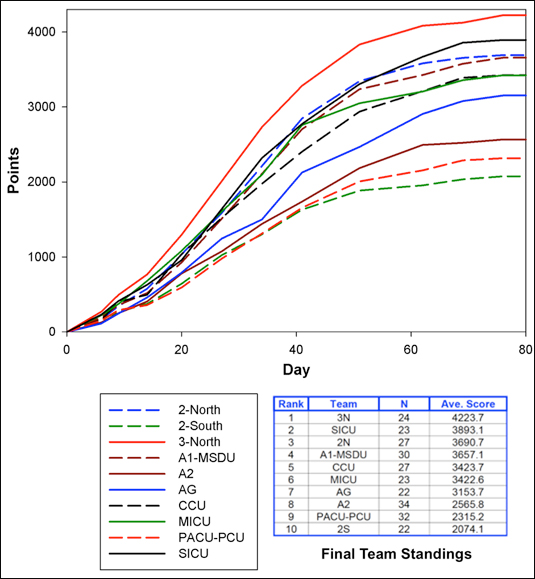

Figure 1: Progression of VA nurses’ team scores over the 3-month program.

Reinforcing Knowledge with Fierce (but Friendly) Competition

Qstream, a company launched by Harvard to spread the SE methodology across professional education, has made many refinements to the methodology, including optimizing delivery for mobile devices and introducing several powerful and engaging game mechanics with social communications elements. These game mechanics significantly boost learning efficiency and engagement via adaptive content reinforcement based on performance, competition among participants, competition among teams, humorous leaderboard aliases for participants (Cheeky Raccoon, Nine Inch Nails, etc.), and a progression dynamic where progress is displayed and measured by completing itemized tasks (Salen et al., 2004; Schonfeld, 2010; Kerfoot, 2010).

In a recent initiative, we implemented this “SE game” approach for patient safety training among 264 nurses from 12 inpatient wards at the VA Boston Healthcare System. Twenty-two separate question-explanation items were developed that focused on infection control and patient safety. The pictures for each question featured actual nurses from the different wards. The game was structured with two questions sent twice a week in order to provide a meaningful volume of education to the nurses while balancing this with their demanding clinical workloads. The system repeated a question after 8 days if it was answered incorrectly and after 16 days if it was answered correctly. The spacing intervals between repetitions were established based on psychology research findings to optimize long-term retention of learning (Pashler et al., 2007; Cepeda et al., 2008). If a question was answered correctly twice consecutively, it was retired from the SE game (progression dynamic).
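
The repetition rule described above can be sketched in a few lines of Python. This is only an illustration of the rule as stated in this article, not the code of the actual platform: the names `QuestionState` and `record_answer` are hypothetical, and the sketch assumes that an incorrect answer resets the count of consecutive correct answers.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Optional

# Intervals taken from the article; the constant names are illustrative.
RETRY_AFTER_INCORRECT = timedelta(days=8)
REPEAT_AFTER_CORRECT = timedelta(days=16)
CORRECT_STREAK_TO_RETIRE = 2


@dataclass
class QuestionState:
    """Tracks one question for one learner (hypothetical structure)."""
    consecutive_correct: int = 0
    next_due: Optional[date] = None  # None until first answered, or once retired
    retired: bool = False


def record_answer(state: QuestionState, answered_on: date, correct: bool) -> QuestionState:
    """Update the state after an answer and schedule the next presentation."""
    # Assumption: an incorrect answer resets the consecutive-correct streak.
    state.consecutive_correct = state.consecutive_correct + 1 if correct else 0

    if state.consecutive_correct >= CORRECT_STREAK_TO_RETIRE:
        # Two correct answers in a row: the question leaves the game.
        state.retired = True
        state.next_due = None
    else:
        delay = REPEAT_AFTER_CORRECT if correct else RETRY_AFTER_INCORRECT
        state.next_due = answered_on + delay
    return state


if __name__ == "__main__":
    state = QuestionState()
    record_answer(state, date(2013, 1, 1), correct=False)
    print(state.next_due)   # 8 days after the incorrect answer
    record_answer(state, state.next_due, correct=True)
    print(state.next_due)   # 16 days after the correct answer
    record_answer(state, state.next_due, correct=True)
    print(state.retired)    # retired after two consecutive correct answers
```

Under this rule, a nurse who answers a question correctly on both its first and second presentations sees it only twice, while a question that is missed keeps returning at the shorter interval until it is mastered.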

The goal of the SE game was to retire all 22 questions. To foster a sense of competition and community, nurses were shown how many others had correctly answered a given question and which answer choices had been selected. The length of the SE game varied based on how quickly each nurse answered and retired the SE questions. Points were awarded to nurses based on how they performed on each question on initial presentation: 100 points for a correct answer, 0 for an incorrect answer, and partial credit for a partially correct response. In addition, points were awarded when a question was retired, with a penalty for any prior incorrect responses.

Leaderboards of individual performance were posted online on each explanation page and in each SE game email. Nurses were de-identified so as to create a safe environment for them to answer the questions. Their first names were replaced with the name of the ward, and last names were replaced with the name of a cool rock band (CCU Bee Gees, etc.). Teams were designated by clinical ward, and each team’s score was calculated as the average score of its nursing team members. Updated team leaderboards were posted each week to boost engagement among nursing units. The team with the highest average point total at the end of the game was awarded VA Boston fleece jackets. In addition, all nurses who answered all 22 questions at least once would be exempted from the annual infection control mandatory training.
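
For readers who want to see the team scoring spelled out, the following Python sketch ranks wards by the average score of their members. It is a hedged illustration only: the function name `team_leaderboard`, the input format, and all of the point totals and aliases in the example (other than “CCU Bee Gees”) are invented for demonstration and are not data from the program.

```python
from collections import defaultdict
from statistics import mean


def team_leaderboard(scores):
    """Rank wards by the average points of their members.

    `scores` is an iterable of (ward, alias, points) tuples; this input
    format is an assumption made for the sketch.
    """
    points_by_ward = defaultdict(list)
    for ward, _alias, points in scores:
        points_by_ward[ward].append(points)

    # Team score = average of its members' scores, as described in the article.
    averages = {ward: mean(points) for ward, points in points_by_ward.items()}

    # Highest average first.
    return sorted(averages.items(), key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    # Hypothetical point totals, for illustration only.
    example_scores = [
        ("CCU", "CCU Bee Gees", 200),
        ("CCU", "CCU Example Band", 100),
        ("ICU", "ICU Example Band", 180),
    ]
    for ward, average in team_leaderboard(example_scores):
        print(ward, average)
```

Averaging rather than summing keeps large and small wards on an equal footing, which matters when team sizes differ across the 12 units.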

More than 95% of nurses volunteered to participate in this patient-safety initiative. Frankly, we were amazed at this overwhelming participation on a topic that usually does not generate much excitement. Of the 264 nurses on the 12 teams, 234 (89%) submitted answers to all questions. The program was very popular among the nurses: 89% of respondents on the end-of-program survey “strongly agreed” or “agreed” that “Overall, the SE game was better than the usual VA online training programs” and “Completing the SE online game will enable me to provide better and safer care to our veterans.” In rating their overall SE online game experience, 85% responded positively. Eighty-seven percent stated that they would like to have other VA mandatory training via SE games, and 86% responded that they would participate in an SE game even if no prizes were awarded.

Since March 2013, the SE game has been used for Joint Commission Continual Readiness Education at all three VA Boston Healthcare System (VABHS) campuses, with more than 2,100 staff members participating. Aside from nursing, participating services include pharmacy, mental health, engineering, environmental management services, nutrition, and social work, among others. Our results have led to the spread of the SE methodology across the United States, including hospital-system implementations at Partners HealthCare in Boston and among the University of California Health Quality Improvement Network.

In summary, traditional bolus (AKA “binge-and-purge”) formats for patient safety training are not conducive to the way that people actually store information critical to job performance. In addition, patient safety training often elicits more groans than grins from participants. As demonstrated in the examples above, SE is an evidence-based, engaging, and elegantly simple methodology to boost learner engagement, increase the long-term impact of patient safety training, and improve patient care.

B. Price Kerfoot is an associate professor of surgery at Harvard Medical School. He holds degrees from Harvard (MD & EdM), Oxford (MA) and Princeton (AB). Kerfoot is the recipient of a “Rising Star” Award from the American Urological Association Foundation and was honored in 2011 by President Obama with the Presidential Early Career Award for Scientists and Engineers (PECASE) for his work on education technology research. The award is the “highest honor bestowed by the United States government on outstanding scientists and engineers in the early stages of their independent research careers.”

Harvard University has submitted a patent application on the spaced education methodology; while Dr. Kerfoot is cited as the inventor on the patent application, Harvard University has full ownership of the intellectual property. Dr. Kerfoot is an equity owner and director of Qstream Inc., an online platform launched by Harvard University to host spaced education outside of its firewalls.

The views expressed in this article are those of the author and do not necessarily reflect the position and policy of the United States Federal Government or the Department of Veterans Affairs. No official endorsement should be inferred.

References