Human Performance Limitations in Medicine: A Cognitive Focus (Part 2)

By Bob Baron, PhD

Editor’s note: Part 2 of 2.

Case study revisited

Much of what has been covered to this point has been a cursory look at human factors, human behavior, and cognition. “Cursory” is the operative word here, as volumes have been written on each subject. However, these fundamental tools should give the reader enough insight to revisit the case study presented in part 1 and understand human performance limitations as they apply to medicine. This part of the article concentrates on the five causal factors listed in the root cause analysis. The first three factors are combined into one category: medication errors. These factors were listed as follows:

1. “Nearly 600 orders of medication labels are manually prepared and sorted daily”

2. “Labels are printed in ‘batch’ by floor instead of by drug”

3. “The medications have ‘look-alike’ brand names”

Medication errors may be the largest and most insidious problem in the hospital environment. According to U.S. Pharmacopeia (2002), data from a 2001 study indicate that distractions (47%), workload increases (24%), and staffing issues (36%) are frequent contributing factors in medication errors; the percentages sum to more than 100% because a single error can involve more than one factor. The report also states that these percentages are fairly uniform among hospitals nationwide. Given the chaotic environment of most hospitals, even the most highly skilled medical professionals are apt to have their cognitive abilities challenged. Some of the most pronounced problem areas include the following (in no particular order):

- Right medication administered to the wrong patient

- Wrong medication administered to the right patient

- Improper dosage or wrong administration time

- Difficulty in reading written prescriptions

- Similar-sounding drug names

- Similar-looking drugs (tablets, pills, fluids) and packaging (vial sizes and shapes)

- Computer input/output errors

- Storage locations

In this case study, with nearly 600 medication labels prepared per day, the atmosphere was ripe for error. Many drugs have similar-sounding names, and during the labeling process the technician is likely to be multitasking, under time pressure, and subject to multiple interruptions (not to mention a consistently noisy environment). The ideal remedy would be to let the technician concentrate on one task at a time, without interruption or time pressure, in a soundproofed room. Because that scenario is unrealistic, there needs to be an understanding of the forces that affect the practical working environment.

People are able to multitask fairly well when only lower-level attention is required. In other words, we can do multiple things at once when a certain amount of automaticity is built in (such as the proverbial walking and chewing gum). However, when faced with multiple higher-level cognitive demands, the combined load exceeds our capacity to process information effectively, and processing and decision errors follow.

One of the most salient examples of this limitation involves two activities that many people combine daily: talking on a cell phone while driving. Separately, these activities are fairly innocuous. Together, they can create a cognitive overload with deadly implications. A meta-analysis of the impact of cell phone conversations on driving showed how mental processing ability is compromised when the two activities are conducted together (Horrey & Wickens, 2004). Across the 16 studies analyzed (contributing a total of 37 analysis entries), there was a clear cost to driving performance when drivers were engaged in cell phone conversations. These costs appeared primarily in reaction time, with smaller costs in road-tracking performance. Interestingly, the results showed no significant difference between hand-held and hands-free conversations, suggesting that the conversation itself, not the device used to conduct it, is the distracter. A particularly dangerous scenario is talking on a cell phone while driving in an unfamiliar area or through a construction zone. Combine a driving task that is no longer automatic (because of the unfamiliar road or the construction) with an emotional phone conversation (a cognitive distraction), and the potential danger becomes obvious.

Advances in medication technology can mitigate errors, but only to a point. Take, for example, the automated drug-dispensing machine known as Pyxis®. These systems combine storage with inventory control software: a request for a drug is entered into the system, and the appropriate drawer is unlocked to allow access to the drug (Medicare Payment Advisory Commission, 2003). The author directly observed a medication error unfold in real time at one of a large hospital’s Pyxis machines. The machine itself worked flawlessly; the error arose from the human interaction with it. A nurse correctly entered a patient’s information into the computer, and the Pyxis unlocked the correct drawer and dispensed the medication. A few minutes later, the nurse came back to the station and proclaimed that she had “almost administered the wrong drug” and was “glad she caught it when she did.” The drawer had contained the wrong drug. The nurse had done nothing wrong when operating the Pyxis; rather, the technician who last refilled the machine had placed the wrong drug in the drawer. The error was compounded by the fact that the incorrect vial was similar in size, shape, and color to the correct one. The lesson learned here: Modern technology is only as good as the humans who interface with it. The nurse was commended for catching a potentially serious medication error.

Another recent technological step has been the implementation of bar coding for human drug and biological products. Similar to the bar codes found on many consumer products, drugs and blood products are required to carry a bar code label containing critical information such as the drug’s National Drug Code (NDC) number (Federal Register, 2004). This method will reduce the likelihood that similar-sounding drugs, such as Celebrex® and Cerebyx®, will be confused (especially when their names are written by hand). Similarly, wrong-type blood transfusions caused by mislabeling should decrease. However, the human aspect of error remains. If the physician orders the wrong drug, bar coding will not prevent a medication error; it will merely verify that the errant drug is exactly what was ordered. Even with technology, one must understand the limitations of the input/output process, where humans are still accountable for a multitude of actions.
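To make the last point concrete, here is a minimal Python sketch, assuming a hypothetical bedside check that simply compares the NDC read from a scanned label against the NDC on the active order. The names `Order` and `barcode_matches_order` and the placeholder codes are illustrative assumptions, not any real system’s interface or real products.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Order:
    """A hypothetical medication order: who it is for and which NDC was ordered."""
    patient_id: str
    ndc: str


def barcode_matches_order(order: Order, scanned_ndc: str) -> bool:
    """Return True if the scanned product's NDC equals the ordered NDC.

    This only confirms that the dispensed product is the one that was ordered;
    it has no way of knowing whether the order itself was clinically correct.
    """
    return scanned_ndc == order.ndc


# Placeholder codes for illustration only (not real NDCs).
INTENDED_DRUG = "NDC-A"
LOOK_ALIKE_DRUG = "NDC-B"

# Case 1: the order is correct, but a look-alike vial is pulled. The scan catches it.
correct_order = Order("patient-001", INTENDED_DRUG)
print(barcode_matches_order(correct_order, LOOK_ALIKE_DRUG))  # False

# Case 2: the prescriber ordered the look-alike drug by mistake.
# The dispensed vial matches the (wrong) order, so the scan passes.
wrong_order = Order("patient-001", LOOK_ALIKE_DRUG)
print(barcode_matches_order(wrong_order, LOOK_ALIKE_DRUG))  # True
```

The first check catches a look-alike vial pulled against a correct order; the second passes because the scan faithfully matches an order that was wrong to begin with. The human input upstream of the scanner is still the weak link.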

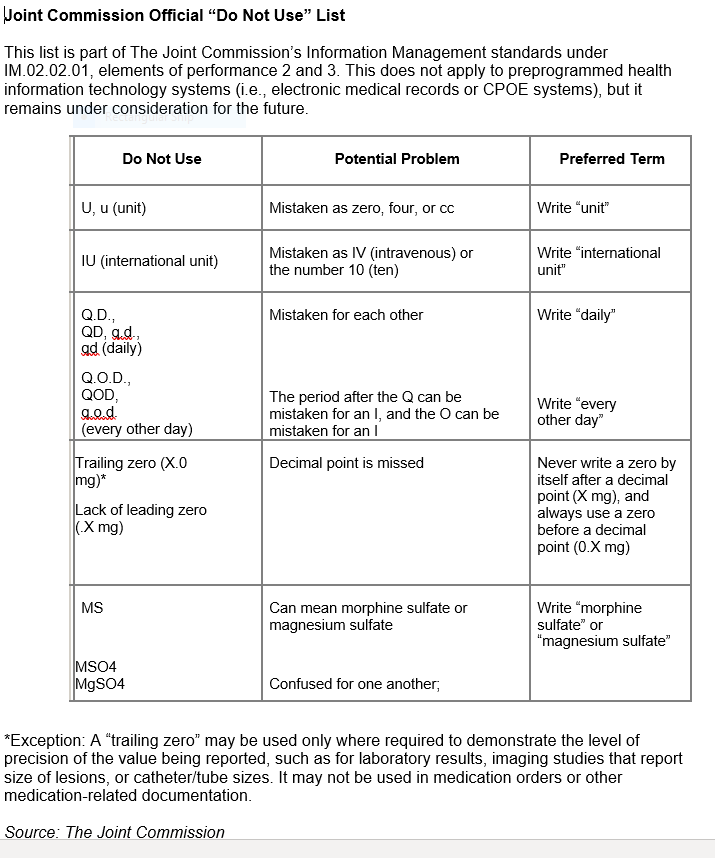

Ambiguous terminology has caused, and continues to cause, numerous medical errors. Specifically, written orders that must be deciphered by nurses and pharmacy technicians are subject to mistakes in interpretation and processing. So bad is the problem that the Joint Commission on Accreditation of Healthcare Organizations (now called The Joint Commission) issued a “prohibited abbreviations” list in 2004 that has been updated over the years (The Joint Commission, 2019). The list includes items that must be included on each accredited organization’s “Do not use” list. The following chart shows the abbreviations, their potential problems, and the preferred terms:

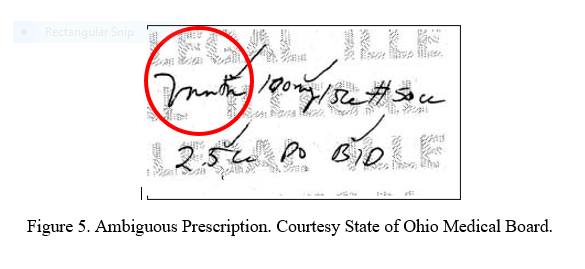

Compounding the problem, hand-written prescriptions are often scrawled in a rushed and nearly indiscernible manner. Most people have received a written prescription at least once in their life and have probably asked the question, “How can anyone read this?” Even trained professionals may have difficulty interpreting a written order, such as the one depicted in Figure 5.

In this case, the dispensing pharmacists misread a prescription for Vantin® as Motrin®. Each pharmacist member of the Pharmacy Board in turn examined the written prescription and misread it in the same way (State of Ohio Medical Board, 2003). This case illustrates the complexity of this type of error because it is unclear where the blame lies: The physician wrote what he meant to write, and the pharmacists unanimously agreed (albeit wrongly) on what it said. Because in their view there was no ambiguity, the pharmacists did not contact the physician for clarification. This case is also an excellent example of how an “error chain” can be initiated. In this scenario, two glaring questions come to mind: 1) With such scarce resources (i.e., people and time), is it necessary to form a committee for the sole purpose of translating a single word? 2) How difficult would it have been to take a few extra seconds to write legibly and prevent this medication error in the first place?

4. “A pharmacy technician trainee was working in the IV medication preparation room at the time”

Although some of the factors that may have contributed to the mislabeling of the “50 ml bottle of Diprivan®” as “Diflucan® 100 mg/50 mL” have already been covered, it is worth considering how this specific error occurred. In short, the pharmacy technician’s working environment was conducive to error, similar-looking drug labels were in use, and the technician was a trainee. As previously stated, even the most experienced technicians are not immune to this type of error. When an unsupervised trainee works alone in this environment, the chance that an error goes uncaught increases greatly.

One of the key cognitive problems this technician may have faced was the “see what you want to see” syndrome. The problem is just what its name suggests: People are apt to see something because they expect to see it. This loss of perceptual discrimination may be due to complacency, boredom, fatigue, environmental conditions, lack of experience, lack of training, or any combination of these.

As an aviation trainer, the author has informally experimented with pilots in a simulator to observe the effects of this syndrome. The scenario was set up like this: Just before the crew initiated the before-landing checklist, the reading of a pressure gauge was dropped from 1500 to 0 psi. Part of the checklist included a check of this gauge to verify that the pressure was “in the green” (normal range, about 1500 psi). When the crew came to this checklist item, approximately 80% of the pilots looked at the gauge and announced that the “pressure was in the green,” even though the gauge indicated 0 psi and the annunciator light that signaled critically low pressure was illuminated. This scenario is analogous to mistakes that can occur during medical procedures, and a high degree of vigilance is required to avoid such errors.

5. “The nurse had been ‘yelled at’ the day before by another physician—she attributed her immediate and total diversion of attention in large part to her fear of a similar episode”

The final factor in this case study illuminates a deficiency in human performance precipitated by a mismatch in the L-L interface (see Figure 1 in part 1 of this article). Recall that the additional “L” was added to the SHELL model because people were interfacing not only with the environment, software, and hardware, but also with other people. Unfortunately, this interface is highly susceptible to errors, specifically errors in the communication process. In the case study, the nurse sensed that there was something amiss when a glass bottle containing an opaque liquid arrived instead of the plastic bag containing a clear solution that she expected. This was a good catch on her part, but unfortunately, she was not able to trap the error at that point because of a distraction that required immediate attention. When the nurse returned to the patient, she forgot that there was a discrepancy with the medication and proceeded to connect the bottle of Diprivan to the patient’s subclavian line. It was only after the IV pump alarmed due to air in the line that the nurse remembered that the wrong medication was being administered.

Although the nurse’s distraction allowed this error chain to continue, the most disturbing finding might be her fear of being yelled at by a physician. Fear should not be part of a nurse’s job, as it compounds these types of errors. Instead, all medical teams need to foster an environment of effective, professional interaction, which is critical to patient safety. Unfortunately, many teams operate within a steep hierarchy that makes subordinates afraid to speak up or challenge the person in charge (the physician, surgeon, anesthesiologist, etc.). Rather than “making waves,” the subordinate will tend to go along with higher-level decisions for fear of repercussions from other staff or, worse, of being labeled a “complainer” and possibly losing their job.

Aviation has addressed, among other things, communication and group dynamics by mandating Crew Resource Management (CRM) training for all airline pilots. CRM empowers crew members to speak up and challenge the captain when an uncomfortable situation or “red flag” exists. This type of training is slowly gaining acceptance in medicine, but until it is widely adopted, these barriers will persist. Physicians need to understand that their coworkers are some of their best resources, and the input from those coworkers can help mitigate errors and prevent patient harm.

One final word on this fifth factor of the case study: Even though memory was addressed earlier in this paper, it is worth speaking briefly about the nurse’s failure to remember that, before being distracted, she had intended to address the medication discrepancy. This is known as prospective memory, or remembering to do things in the future. In contrast to our strong retrospective memory (remembering things from the past), prospective memory is one of the weakest parts of human memory. This is one of the reasons why people tie ribbons on their fingers, use day planners, and write reminders on their hands.

In aviation, NASA has conducted research that addresses prospective memory in airline pilots. One such study conducted by Dismukes, Loukopoulos, and Jobe (2001) found that, in an informal analysis of 37 National Transportation Safety Board (NTSB) reports involving crew error, nearly half showed evidence of interruptions, distractions, or preoccupation with one task to the detriment of another. Some recommendations by Dismukes et al. include creating salient reminder cues, breaking concurrent tasks into subtasks and pausing between subtasks to monitor, and identifying specific things to monitor.

In another NASA study, Lang-Nowinski, Holbrook, and Dismukes (2003) found that out of a random sample of 1,299 reports from NASA’s anonymous Aviation Safety Reporting System (ASRS) database, 75 indicated memory errors by pilots. Of those 75, only one described an instance of retrospective memory failure—the remainder involved some form of prospective memory failure.

NASA’s memory research in aviation can be applied to any domain, including medicine. The nurse had to deal with a prospective task that was interrupted by a distraction, a significant precursor for error. The “fear factor” only made it more difficult for her to perform to her fullest potential.

Conclusion

This paper has discussed many of the cognitive factors that influence people in the medical profession. It was not meant to delve fully into all aspects of cognition, as that would require hundreds of pages. Instead, two human factors models were presented, and a case study was used to illustrate human factors error principles in the day-to-day activities of a typical hospital environment.

While aviation has addressed human factors and CRM as part of initial and recurrent training for airline pilots, healthcare has continued to work in a traditionally autonomous environment with little or no emphasis on human performance and group dynamics. That needs to change.

Bob Baron, PhD, is the president and chief consultant of The Aviation Consulting Group in Myrtle Beach, South Carolina. He has been involved in aviation since 1988, with extensive experience as a pilot, educator, and aviation safety advocate. Dr. Baron uses his many years of academic and practical experience to assist aviation organizations in their pursuit of safety excellence. His specializations include safety management systems and human factors.

References

Dismukes, R. K., Loukopoulos, L. D., & Jobe, K. K. (2001). The challenges of managing concurrent and deferred tasks. In R. Jensen (Ed.), Proceedings of the 11th International Symposium on Aviation Psychology. Columbus, OH: Ohio State University.

Federal Register. (2004). Bar Code Label Requirement for Human Drug Products and Biological Products. Retrieved March 20, 2020, from https://www.federalregister.gov/documents/2004/02/26/04-4249/bar-code-label-requirement-for-human-drug-products-and-biological-products

Horrey, W. J., & Wickens, C. D. (2004). The impact of cell phone conversations on driving: A meta-analytic approach. University of Illinois at Urbana-Champaign. Retrieved from www.aviation.uiuc.edu/UnitsHFD/TechPdf/04-2.pdf

The Joint Commission. (2019). Official “Do Not Use” List. Retrieved March 20, 2020, from https://bit.ly/33AaAUN

Lang-Nowinski, J., Holbrook, J. B., & Dismukes, R. K. (2003). Human memory and cockpit operations: An ASRS study. NASA Ames Research Center. Retrieved from https://human-factors.arc.nasa.gov/flightcognition/Publications/Nowinski_etal_ISAP03.pdf

Medicare Payment Advisory Commission. (2003). Physician-administered drugs: Distribution and payment issues in the private sector. Retrieved March 20, 2020, from https://bit.ly/2Qy6MON

State of Ohio Medical Board. (2003). Illegible prescriptions and medication errors. Your Report From the State Medical Board of Ohio. Winter/Spring 2003. Retrieved March 20, 2020, from https://bit.ly/2Ww1Uxp

U.S. Pharmacopeia. (2002). USP releases new study on medication errors at U.S. hospitals.