Haptics for Touch-Enabled Simulation and Training

July / August 2008

The science of haptics — artificial touch technology — is stepping out of the research environment and into commercial medical training applications.

Clinicians often think of simulation as taking place on mannequins, where staff members can practice repeatedly until they achieve mastery. Advanced technology, however, is allowing computers to power far more realistic, guided learning experiences than static “dummies” can provide. These experiences will directly benefit clinicians and patients while still delivering the key benefits of simulation, including the ability to avoid using cadavers, animals, and live patients for learning.

While joysticks in consumer games offer tactile perception (sensing vibrations), haptically enabled medical simulations provide force feedback (sensing the resistance of pushing against virtual objects). They rely on sophisticated, high-fidelity haptic devices and software that deliver greater precision, differentiation between materials such as bone and soft tissue, and a more realistic sense of touch.

Haptics is also starting to make meaningful inroads in physical therapy, with applications that benefit patients and free clinicians from having to be present at every session: the computer facilitates patient training and automatically keeps ongoing records of performance and skill levels. A single-case study in Sweden has demonstrated that a touch-enabled rehabilitation device can promote upper-extremity motor rehabilitation after stroke and can help restore brain function (Broeren et al., 2004). Touch-enabled simulations also hold potential for physician recertification.

Learning by Feel

Haptics belongs to the category of virtual reality technologies that create an artificial environment. Most such experiences are visual, viewed either on a standard computer screen or through stereoscopic displays worn by the user, as some video gamers do. Sound is now often part of the experience, and increasingly so are tactile experiences, or “haptics.”

The haptics most frequently seen in medical simulation and training uses a technology known as proprioceptive “force feedback,” in which users hold a tool or stylus that pushes back on their hand when it makes contact with virtual objects. The stylus is usually driven by motors (or, in one recent case, by magnetic levitation) and is programmed by software to deliver specific levels of force, or “feel.” Based on the material properties of tissue, muscle, fat, open cavities, or more specific anatomical substructures, these forces can be programmed to simulate the sensation of interacting with different organs.
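To make the idea of programmed force concrete, here is one common textbook approach, penalty-based rendering, in which the device pushes back in proportion to how far the stylus tip has penetrated a virtual surface (Hooke's law, F = kd). This is a minimal Python illustration, not SensAble's or any vendor's implementation; the flat surface, the `Plane` and `contact_force` names, the per-material stiffness values, and the force limit are all assumptions chosen for illustration.

```python
from dataclasses import dataclass

# Hypothetical stiffness values in N/mm. They only illustrate that harder
# materials push back harder; they are not measured tissue properties.
STIFFNESS_N_PER_MM = {
    "bone": 2.0,
    "muscle": 0.5,
    "fat": 0.2,
    "cavity": 0.0,  # open space offers no resistance
}

@dataclass
class Plane:
    """A flat virtual surface; the material lies below surface_z (mm)."""
    surface_z: float
    material: str

def contact_force(stylus_z: float, plane: Plane, max_force_n: float = 3.3) -> float:
    """Upward force (N) a penalty-based renderer would command.

    Uses Hooke's law, F = k * d, where d is how far the stylus tip has
    penetrated below the surface, and clamps the result to max_force_n
    (an assumed device output limit).
    """
    depth = plane.surface_z - stylus_z
    if depth <= 0.0:
        return 0.0  # stylus is not touching the surface
    k = STIFFNESS_N_PER_MM[plane.material]
    return min(k * depth, max_force_n)
```

With these illustrative numbers, a 1 mm penetration produces 2.0 N against "bone" but only 0.2 N against "fat", the kind of difference a trainee learns to recognize by feel. Practical haptic renderers typically update forces around 1,000 times per second and use richer contact models than a single plane.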

Acquiring surgical skills through apprenticeship takes a great deal of time and mentoring, and this learning process can sometimes lead to poor patient experiences. Effective alternative methods for training surgeons are needed, and medical simulations are providing viable solutions. A growing body of evidence indicates that haptically enabled training improves surgeon performance in the operating room, which in turn can save lives.

The traditional practice for teaching procedures to surgical residents is “see one, do one, teach one,” under clinician supervision, using expensive bone specimens from cadavers or working on real patients. Neither option allows thorough, risk-free training. Cadavers are costly and usually in short supply, and some countries (for example, the United Kingdom) ban them altogether. Patient-based learning, even in the highly supervised settings of today’s medical centers, introduces risk and malpractice concerns, particularly with high-risk procedures such as temporal bone drilling near the brain, where the slightest deviation can have widespread and permanent adverse consequences.

Computer-based, haptically enabled training simulators can benefit medical education in several ways: guided lessons without a mentor physically present; simulation of real patient anatomy and function, including relevant anatomic variants; and automatic assessment, or batched review by the teacher. Medical students can practice a procedure an unlimited number of times until they learn to “feel” how it is done, and can be skill-tested in a controlled, measurable environment. The touch component is key to giving students accuracy and confidence as they learn new procedures.
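Automatic and batched assessment can be as simple as computing a few objective measures from each recorded attempt. The sketch below is a hypothetical Python example: the `Trial` fields, the choice of metrics (task time, instrument-tip path length, and error count), and the pass thresholds are illustrative assumptions, not the scoring scheme of any simulator described in this article.

```python
import math
from dataclasses import dataclass
from typing import Dict, List, Tuple

Point = Tuple[float, float, float]  # instrument-tip position in mm

@dataclass
class Trial:
    """One recorded practice attempt (hypothetical field names)."""
    duration_s: float
    tip_path: List[Point]  # sampled instrument-tip positions
    error_events: int      # e.g. contacts with a structure that must not be touched

def path_length(points: List[Point]) -> float:
    """Total distance travelled by the instrument tip, in mm."""
    return sum(math.dist(a, b) for a, b in zip(points, points[1:]))

def assess(trial: Trial, max_time_s: float, max_path_mm: float,
           max_errors: int = 0) -> Dict[str, object]:
    """Score one trial against teacher-chosen limits."""
    length = path_length(trial.tip_path)
    passed = (trial.duration_s <= max_time_s
              and length <= max_path_mm
              and trial.error_events <= max_errors)
    return {"time_s": trial.duration_s, "path_mm": length,
            "errors": trial.error_events, "passed": passed}

def assess_batch(trials: List[Trial], **limits) -> List[Dict[str, object]]:
    """Batched assessment: apply the same limits to every stored trial."""
    return [assess(t, **limits) for t in trials]
```

A teacher could run `assess_batch` over a whole class's stored attempts at once; shorter, more economical tip paths with fewer errors are one plausible proxy for skill, though a real curriculum would define its own metrics.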

Haptically Enabled Surgical Training Improves OR Performance

Seymour et al. (2002) showed that skills learned through haptically enabled training on a virtual-reality (VR) system do transfer to the operating room (OR). Gallbladder dissection was 29% faster for residents who underwent haptically enabled training on the component tasks of the surgery. Residents trained the traditional way were nine times more likely to transiently fail to make progress and five times more likely to injure the gallbladder or burn non-target tissue. The authors stated that their work sets the stage for more sophisticated uses of VR technologies in the assessment, training, error reduction, and certification of surgeons.

The same realism can also be applied to patient rehabilitation. Broeren et al. (2004) reported that virtual reality and haptics training can promote motor rehabilitation, showing that playing a 3D computer game could improve upper-extremity motor function. The haptic feedback the patient experienced was a key aspect of the therapy: it trained the patient’s strength, grip, wrist rotation, and other hand movements. The authors reported that the realism of the haptic feedback enhanced the game and, in turn, the study results.

State of Touch-Enabled Simulation

Several specialty areas have seen a surge in the availability of haptically enabled simulators, including arthroscopic surgery and temporal bone drilling. Simulators have been developed more quickly for some surgical specialties than for others because of a confluence of factors: efforts to improve physician training and patient safety for high-risk or difficult-to-learn procedures, the commitment of specialty practice boards, the interest of practicing physicians in applying computer technology to their domains, and commercial interest from startups and venture-capital investors.

There are also haptically enabled simulations for a broad range of procedures, such as:

- Training nurse anesthetists and anesthesiologists to perform epidurals, a procedure in which the needle must pass through as many as seven tissue layers before reaching the target location for the injection

- Explaining how to insert a newly designed hip joint implant

- Petrous bone surgery

- Spinal surgery simulation and training

In many of these domains, the haptic component is a vital element of realistic training; without it, the training experience is far less valuable. Blind procedures such as epidural injections are learned by feel, which is hard to do when cadavers are limited and practice on patients is too risky. Arthroscopic procedures require maneuvering instruments in a very confined space. Surgical drilling procedures can be challenging for surgeons-in-training to learn, particularly when they involve tiny, hard-to-view cavities such as those in the temporal bone, which houses the inner ear.
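The feel of an epidural can be approximated with a layered tissue model in which each layer resists the needle differently, and a sudden drop in resistance signals entry into the epidural space (the clinical "loss of resistance" cue). The Python sketch below is a simplified, hypothetical model: it uses five common midline layers rather than the up to seven tissue types mentioned for epidurals, and every thickness and force value is made up for illustration.

```python
from bisect import bisect_left
from itertools import accumulate

# (layer, thickness in mm, resistance in N) along a midline approach.
# All numbers are made up for illustration; they are not clinical data.
LAYERS = [
    ("skin", 3.0, 1.5),
    ("subcutaneous tissue", 20.0, 0.8),
    ("supraspinous ligament", 5.0, 1.2),
    ("interspinous ligament", 15.0, 1.0),
    ("ligamentum flavum", 4.0, 2.5),
    ("epidural space", 5.0, 0.1),
]
# Depth (mm) at which each layer ends: 3, 23, 28, 43, 47, 52.
LAYER_ENDS = list(accumulate(thickness for _, thickness, _ in LAYERS))

def _layer_index(depth_mm: float) -> int:
    """Index of the layer containing the tip; layer i spans (end[i-1], end[i]]."""
    i = bisect_left(LAYER_ENDS, depth_mm)
    if i >= len(LAYERS):
        raise ValueError("needle tip is beyond the modeled tissue")
    return i

def layer_at(depth_mm: float) -> str:
    """Name of the tissue layer at a given insertion depth."""
    return LAYERS[_layer_index(depth_mm)][0]

def needle_resistance(depth_mm: float) -> float:
    """Force (N) opposing needle advance; zero before skin contact."""
    if depth_mm <= 0.0:
        return 0.0
    return LAYERS[_layer_index(depth_mm)][2]

def loss_of_resistance(prev_depth_mm: float, depth_mm: float,
                       drop_ratio: float = 0.5) -> bool:
    """True when resistance falls below drop_ratio of its previous value."""
    before = needle_resistance(prev_depth_mm)
    after = needle_resistance(depth_mm)
    return before > 0.0 and after < before * drop_ratio
```

With these illustrative numbers, advancing from 46.5 mm to 47.5 mm crosses from the ligamentum flavum into the epidural space and triggers the cue, while the smaller change at the skin boundary does not.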

The work of two firms, one in arthroscopic surgery simulation and the other in patient rehabilitation, demonstrates how teaching with a sense of touch significantly improves the training experience.

Arthroscopic Shoulder/Knee Surgery

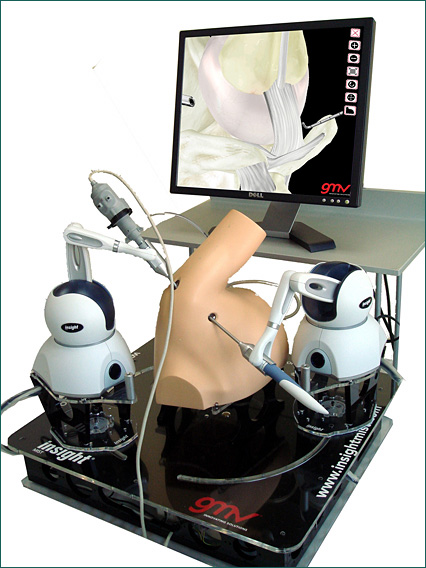

GMV provides the insightArthroVR® simulator for training physicians in key aspects of arthroscopic surgery. The simulator teaches surgeons the essential skills of triangulation, camera orientation, and hand-eye coordination, all of which they “feel” in this haptically enabled system. Force feedback is synchronized with 3D visuals, and, as simulation users have reported, the element of touch is vital to the simulation’s effectiveness.

The system (Figure 1) consists of a base structure; a plastic model representing the anatomy of the shoulder, the knee joint, or both, depending on which system is purchased; two off-the-shelf haptic devices from SensAble Technologies, which mimic the arthroscope and the probe used to perform the virtual surgery; a computer monitor; and a computer running GMV-developed software that contains 3D anatomical models and learning modules.

The insightArthroVR® is used for both knee and shoulder arthroscopy, and students use two haptic devices, one in each hand. In knee arthroscopy, the surgeon-in-training views a 3D image of the knee on screen and performs a simulated procedure using the haptic stylus as the tool; bone, cartilage, ligaments, and meniscus each “feel” different when virtually touched. For instance, as part of learning the anterior cruciate ligament (ACL) reconstruction procedure, trainees must mark the entry points for the femoral and tibial tunnels in the joint cavity, repeating the process until they have mastered its “feel.” In shoulder arthroscopy, they can feel the glenohumeral joint and the subacromial space, including bone, cartilage, labrum, ligaments, and tendons. GMV’s team used SensAble’s software toolkit to program the specific feedback so that the haptic devices deliver the correct forces to the student’s hands.

The haptic devices in the knee simulator let the surgeon-in-training orient the virtual arthroscope and probe in the same positions required during real surgery. For shoulder arthroscopy, the system also lets trainees distinguish the feel of healthy anatomy from that of pathological conditions. A SLAP (superior labrum, anterior to posterior) lesion, for instance, involves an insufficient insertion of the labrum (and biceps tendon) into the scapula. Students can check for it by pushing on the labrum with the probe: if the labrum moves at the biceps insertion and lets the probe slip between the labrum and the bone, a SLAP lesion is present. The simulator lets students learn the difference between the tissues by feeling it.
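The SLAP lesion check can be pictured as a difference in how firmly the labrum is anchored. The hypothetical Python sketch below models the attachment as a linear spring: a healthy insertion is stiff and barely moves under the probe, while an insufficient one yields far enough for the probe tip to slip beneath it. The class name, stiffness values, and probe-tip gap are assumptions for illustration, not GMV's tissue model.

```python
from dataclasses import dataclass

@dataclass
class LabrumModel:
    """Labrum attachment at the biceps insertion, modeled as a linear spring.

    A low attachment_stiffness (N/mm) stands in for an insufficient
    insertion. The stiffness values and gap used here are assumptions,
    not measured properties.
    """
    attachment_stiffness: float
    probe_gap_mm: float = 2.0  # lift needed before the probe tip can slip in

    def displacement(self, probe_force_n: float) -> float:
        """How far (mm) the labrum lifts away from the bone under the probe."""
        return probe_force_n / self.attachment_stiffness

    def felt_force(self, probe_advance_mm: float) -> float:
        """Reaction force (N) the trainee feels for a given probe advance."""
        return self.attachment_stiffness * probe_advance_mm

    def probe_enters(self, probe_force_n: float) -> bool:
        """True when the labrum lifts enough for the probe to pass beneath it."""
        return self.displacement(probe_force_n) >= self.probe_gap_mm

HEALTHY = LabrumModel(attachment_stiffness=5.0)
SLAP_LESION = LabrumModel(attachment_stiffness=0.5)
```

Under a 2 N push, the stiff model lifts 0.4 mm and the probe stays out, while the loose model lifts 4 mm and the probe enters; the trainee also feels the loose labrum yield with a tenth of the resistance.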

Arm/Hand Skill Rehabilitation for Stroke Victims

Curictus, a Sweden-based firm, applies haptics to stroke rehabilitation through its Curictus Neurorehabilitation System, which combines immersive game-style computer content, telemedicine, and skills assessment. Stroke victims often need extensive practice to retrain the muscles of the arm and hand for everyday tasks and to help the brain restore lost function. The haptically enabled application can also serve as a capable “stand-in” for some in-person sessions with a clinician.

In the Curictus Neurorehabilitation System (Figure 2), haptics is central to the learning experience. The system includes a 3D stereographic monitor, a stylus-like haptic device mounted on a robotic arm, and a computer mounted under a table that runs the required software. Patients wear special 3D glasses to view the 3D graphics.

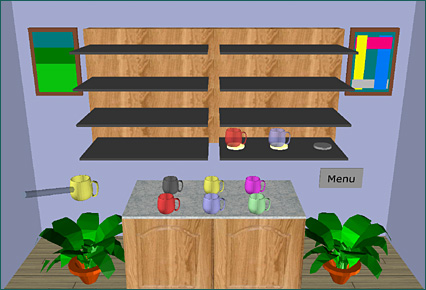

To make rehabilitation more fun and motivating, the clinician creates a custom menu from among 18 games and 4 assessments contained in the Curictus activity library. The games require the patient to perform reaching movements of the upper extremity, as dictated by the target (game element) locations in 3D space. Each game is programmed to provide the patient with varying degrees of “push back” or force feedback for a given task.
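The two ingredients described here, reaching toward targets placed in 3D space and a per-game level of push back, can be sketched as follows. This is a hypothetical Python illustration, not Curictus code: it counts a reach as successful when the stylus tip comes within a tolerance of the target, and it models push back as a simple viscous force opposing the patient's motion (F = -bv) whose damping b is set per game. The tolerance, game names, and damping values are assumptions.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]

# Hypothetical per-game damping in N per (mm/s); 0 means no resistance.
GAME_DAMPING = {"warm-up": 0.0, "strength": 0.02}

def reached(tip: Vec3, target: Vec3, tolerance_mm: float = 10.0) -> bool:
    """A reach counts once the stylus tip is within tolerance_mm of the target."""
    return math.dist(tip, target) <= tolerance_mm

def push_back(velocity_mm_s: Vec3, game: str) -> Vec3:
    """Viscous resistance F = -b * v, using the game's damping b (result in N)."""
    b = GAME_DAMPING[game]
    fx, fy, fz = (-b * v for v in velocity_mm_s)
    return (fx, fy, fz)
```

Raising the damping for a game makes the same arm movement feel heavier, which is one simple way a system could vary force feedback from one task to the next.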

For example, in the game Mug Mastermind, in which the objective is to find the correct color combination by placing mugs on a shelf, the room environment is haptically enabled (Figure 3). The weight of the mugs, their start and end locations in the room, and the shelf positions can be customized to the current patient’s abilities. General difficulty settings (easy, medium, and difficult) are available, and a therapist can make detailed adjustments to guide the patient’s recovery. The games were designed with the needs of older stroke patients in mind, taking into account vision, hearing, touch and movement, attention, learning, memory, and everyday cognitive tasks.
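The adjustable settings listed for Mug Mastermind map naturally onto a preset-plus-override configuration. The hypothetical Python sketch below starts from an easy, medium, or difficult preset and lets a therapist change individual values; the field names, units, and preset numbers are illustrative assumptions, not the actual Curictus parameters.

```python
from dataclasses import dataclass, replace
from typing import Tuple

Vec3 = Tuple[float, float, float]  # position in the virtual room, mm

@dataclass(frozen=True)
class MugGameSettings:
    """Tunable parameters for a mug-placing game (hypothetical names)."""
    mug_weight_n: float     # weight rendered by the haptic device, in newtons
    mug_start_mm: Vec3      # where each mug appears
    mug_end_mm: Vec3        # where the mug must be placed
    shelf_height_mm: float  # height of the target shelf

# Illustrative presets: harder levels use heavier mugs and longer reaches.
PRESETS = {
    "easy": MugGameSettings(0.5, (0.0, 0.0, 0.0), (100.0, 0.0, 50.0), 50.0),
    "medium": MugGameSettings(1.0, (0.0, 0.0, 0.0), (200.0, 0.0, 150.0), 150.0),
    "difficult": MugGameSettings(2.0, (-100.0, 0.0, 0.0), (250.0, 100.0, 250.0), 250.0),
}

def settings_for(level: str, **overrides) -> MugGameSettings:
    """Start from a difficulty preset, then apply a therapist's detailed changes."""
    if level not in PRESETS:
        raise ValueError(f"unknown difficulty level: {level!r}")
    return replace(PRESETS[level], **overrides)
```

For example, `settings_for("medium", mug_weight_n=0.7)` keeps the medium reach distances but lightens the mugs for a patient who is still building strength; because the presets are frozen, adjustments for one patient never alter the shared defaults.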

“Our clinical studies with over 130 individuals to date — patients ranging from age 28 all the way up to 87 years old — have so far been very positive,” said Dr. Martin Rydmark, associate professor in anatomy at Mednet (the Medical Informatics & Computer Assisted Education Institute Department), Institution for Biomedicine, at the Sahlgrenska Academy at Göteborg University in Göteborg, Sweden. “New computing technologies like this are opening up new ways of stroke rehabilitation that impacts patients in a positive way, and clinics can save both time and money.”

Role in Certification and Training

Beyond initial training, touch-enabled simulation also has a role in ongoing certification.

“Technology providing a highly realistic sense of touch to medical simulation is paramount, especially for procedures like arthroscopy where discerning the feeling of different tissues is the crux of the learning process,” said Dr. Dilworth Cannon, arthroscopic surgeon and professor of clinical orthopaedic surgery at the University of California at San Francisco. Dr. Cannon also is chair of the American Academy of Orthopaedic Surgeons’ content development group, and former president of the Arthroscopy Association of North America.

He is leading a seven-city study in the U.S. this summer, funded by the American Academy of Orthopaedic Surgeons (AAOS), the Arthroscopy Association of North America (AANA), and the American Board of Orthopaedic Surgery (ABOS), to evaluate haptic-based training for arthroscopic knee surgery against the typical medical school “see one, do one, teach one” approach. The study tests two separate groups of postgraduate year 3 (PGY-3) residents in arthroscopic surgery: one that learned through traditional residency training and one that trained on a Virtual Reality Knee Arthroscopy Simulator from Touch of Life Technologies (ToLTech) of Aurora, Colorado. In an unrelated project taking place in Australia this summer, a temporal bone drilling simulator from Medic Vision Ltd. is also undergoing validation testing and may present a similar opportunity for otolaryngologist certification.

“Arthroscopic simulation has the potential to help prevent surgical errors that may leave patients crippled or with early arthritis, and in other surgical disciplines, simulation could prevent even worse outcomes,” Dr. Cannon said. For over a decade he has been involved in fostering the AAOS’s and AANA’s initiatives on touch-enabled simulation technologies. This summer’s effort will attempt to validate that the ToLTech simulator can teach a knee diagnostic arthroscopy procedure better than traditional observation and guided learning in the traditional medical school model.

While the AAOS’s interest in simulation is for training and education, the American Board of Orthopaedic Surgery is participating in the validation study because it is interested in adding touch-enabled surgical simulation to its traditional didactic board examinations. Training centers such as the Orthopedic Learning Center in Chicago are also watching the concept with interest. The center delivers two-day physician training courses almost every weekend throughout the year and could benefit from the repeatability, consistency, and unlimited practice opportunities of touch-enabled computer-based simulation. Many of these centers rely on cadaver training, yet each cadaver is different and has limited reusability, and because the average cadaver is 70 to 75 years old, the joints are often too degenerated to provide a good surgical experience.

“Ideally we would like to see touch-enabled simulators in every arthroscopic surgery residency training program, and in addition, in clinical training settings to replace cadaver parts,” said Dr. Cannon. “The technology has advanced so far in the last few years that present and future advantages are just so compelling.”

David Chen is chief technology officer of SensAble Technologies Inc. He has led the development of software for transforming medical data into formats for visualization, surgical simulation, and biometric qualification. He also has done research in the areas of interactive computer graphics and animation, virtual environment techniques, robotic control of jointed figures, and force-based finite element models of skeletal muscle in the study of biomechanics. Dr. Chen can be reached at 781-937-8315 or info@sensable.com.

References

Broeren, J., Rydmark, M., & Sunnerhagen, K. S. (2004). Virtual reality and haptics as a training device for movement rehabilitation after stroke: A single-case study. Archives of Physical Medicine and Rehabilitation, 85, 1247–1250.

Seymour, N. E., Gallagher, A. G., Roman, S. A., O’Brien, M. K., Bansal, V. K., Andersen, D. K., & Satava, R. M. (2002). Virtual reality training improves operating room performance: Results of a randomized, double-blinded study. Annals of Surgery, 236(4), 458–464.