Simulation Techniques for Teaching Time-Outs: A Controlled Trial

Incorrect surgery and invasive procedures sometimes occur on the wrong patient, wrong side, or wrong site; are performed at the wrong level; use the wrong implant; or in some way represent a wrong procedure on the correct patient. Although rare, with a reported incidence of 1 in 112,994 cases, incorrect invasive procedures have potentially disastrous consequences for patients, staff, and healthcare organizations (Dillon, 2008). Patients suffer preventable harm, staff may be censured and emotionally traumatized, and healthcare organizations experience a loss of public reputation and trust.

In an effort to prevent incorrect invasive procedures, The Joint Commission mandates that teams follow the Universal Protocol prior to performing an invasive procedure (Dillon, 2008; Norton, 2007). The Universal Protocol includes the pre-procedure verification of patient identification and the procedure to be performed; confirmation of proper informed consent; marking of the procedure site; and performance of a purposeful “time-out.” Guided by a checklist and facilitated by two providers, time-outs in the form of a face-to-face discussion occur just prior to every invasive procedure performed. Time-out elements include proper verification of patient identity; informed consent; procedure; site and laterality; medical images; and confirmation of the site mark within the sterile field (Dillon, 2008). The facilitation of an effective time-out requires leadership, teamwork, and communication skills (Blanco, Clarke, & Martindell, 2009).
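As an illustration only, the time-out elements listed above can be treated as a fixed checklist against which a team's verifications are compared. The sketch below is not part of the Universal Protocol or of any VHA tool; the element labels are paraphrased from the list above, and the function name `missing_elements` is a hypothetical helper.

```python
# Time-out elements paraphrased from the text above (labels are illustrative).
TIME_OUT_ELEMENTS = (
    "patient identity",
    "informed consent",
    "procedure",
    "site and laterality",
    "medical images",
    "site mark within sterile field",
)


def missing_elements(verified):
    """Return the checklist elements not yet verified, in checklist order."""
    done = set(verified)
    return [element for element in TIME_OUT_ELEMENTS if element not in done]


# Hypothetical time-out in which only two elements were confirmed.
remaining = missing_elements(["patient identity", "procedure"])
print(remaining)
```

A time-out would count as complete in this sketch only when the returned list is empty.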

There are a number of challenges in the prevention of incorrect invasive procedures. The most egregious obstacle is failure to perform a time-out (Dillon, 2008). Other problems include ambivalent compliance, lack of engagement, distractions, mislabeled or wrong medical images, omitting one or more of the checklist elements, and a deficient patient safety culture. While the initial study of the WHO surgical safety checklist, which incorporated the time-out protocol, was associated with a decrease in morbidity and mortality (Haynes et al., 2009), the more recent Keystone Surgery Program, which utilized similar checklist-based interventions, failed to improve patient outcomes for a larger number of patients (Reames, Krell, Campbell, & Dimick, 2015).

A comprehensive strategy for organizationwide implementation of high-quality time-outs prior to invasive procedures includes education, training, and practice (Dillon, 2008; Kelly et al., 2011; Neily et al., 2009; Gottumukkala, Street, Fitzpatrick, Tatineny, & Duncan, 2012). Studies have demonstrated that high-fidelity simulation (HFS) is associated with improvements in the quality of time-outs; however, HFS is expensive and time-consuming, and it does not scale well. Furthermore, HFS requires learners, instructors, and equipment to be simultaneously present. Real-time HFS may not adequately address the challenge of training thousands of residents involved in invasive procedures and rotating through a large healthcare organization (Paull et al., 2013).

A Web-based alternative to HFS is virtual patient simulation (VPS). VPS is a cost-effective, asynchronous learning platform capable of reaching an unlimited number of participants. The purpose of this study is to answer the following question: Among medical residents and faculty, how does face-to-face HFS versus online VPS affect learner confidence in the conduct of the Universal Protocol and time-outs prior to performing invasive procedures?

Methods

Design

Medical residents and faculty members attending a patient safety workshop received didactic training on the prevention of incorrect invasive procedures, the Universal Protocol, and time-outs. The morning of each workshop included: 1) an introduction to patient safety, 2) principles of human factors engineering, and 3) crew resource management (CRM) teamwork and communication tools and techniques. In the afternoon, learners were immersed in simulation scenarios.

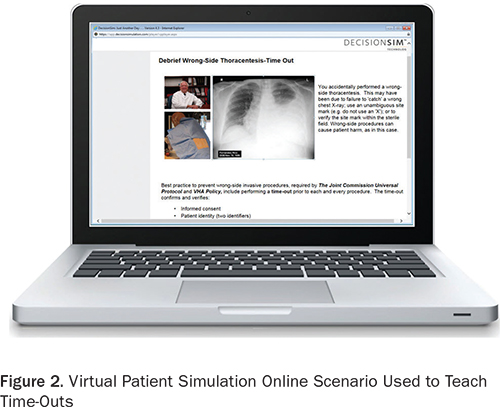

Workshop participants read an informational letter and subsequently underwent HFS (Figure 1) or VPS (Figure 2). A debriefing facilitated by an instructor followed each HFS. Feedback on performance was provided within the VPS by an online synthetic instructor. Participant confidence in learning outcomes was assessed with a survey tool. Data were then compared across the two interventions. The study (RO# 2011-110675) was approved by the Institutional Review Board on February 29, 2012.

Setting and participants

The study was conducted within the Veterans Health Administration (VHA). Subjects were recruited from five large, complex VHA medical centers in four states (VHA, 2010a; VHA, 2010b). Over 37,000 residents train in the VHA annually in 2,300 Accreditation Council for Graduate Medical Education-accredited programs (OAA, 2013). During orientation, medical residents receive training in the prevention of incorrect invasive procedures, with a focus on The Joint Commission Universal Protocol, time-outs, and VHA policy (VHA, 2013). The subjects in this study were medical residents and their faculty mentors. The residents were in their first to third year of training, rotating through a VA medical center, and attending a patient safety workshop. A total of 212 residents and faculty participated in the study.

Data collection

High-fidelity simulation (control group). The control group consisted of medical residents and faculty participating in an HFS scenario involving time-outs. Participants received a brief didactic course on the Universal Protocol and time-outs during the face-to-face patient safety workshop, followed by immersion in an HFS scenario where they conducted a time-out prior to a bedside thoracentesis (Paull et al., 2013). During the simulation, participants were challenged to manage distractions, detect incorrect medical images, and assemble and confirm critical resources including personnel, information, and equipment prior to performing the thoracentesis. An instructor-facilitated debriefing accompanied every HFS immersion. The HFS time-out scenario has been previously vetted by expert clinicians and is both realistic and effective in teaching time-outs. It is also associated with measurable improvements in participant self-reported confidence in conducting time-outs, and with increased compliance with the Universal Protocol and time-out policy. A given HFS consisted of four residents in the “hot seat,” one each in the roles of the resident performing the thoracentesis, the nurse, the certified nursing assistant, and the available attending.

Virtual patient simulation (experimental group). The experimental group was subjected to a new VPS online learning format for delivering simulation-based time-out training. Our organization deployed the DecisionSim (Kynectiv Inc.) software platform (Decision Simulation, 2013), which allows instructors to create VPS online simulation experiences for learners. The software scenes, or nodes, consist of several types: descriptive, inquiry, multiple-choice, branching, or open-ended questions or comments supplemented with multimedia. Learners were immersed in a patient care narrative and were tasked with gathering information, making decisions, and taking action while experiencing the consequences of their decisions within a simulated high-interference environment. Specific and immediate feedback, varying with the learner’s choices, was provided by an online synthetic instructor.
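The branching structure described above can be pictured as a small graph of nodes in which each learner choice carries its own feedback and leads to a next node. The following is a minimal sketch under stated assumptions: it is not the DecisionSim data model or API, and the `Node` class, the `run` helper, and the scenario wording are all hypothetical, loosely modeled on the thoracentesis time-out.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    text: str
    # Maps a learner choice to (feedback, id of the next node).
    # Terminal nodes have no choices.
    choices: dict = field(default_factory=dict)


# Hypothetical three-node scenario; wording is illustrative only.
SCENARIO = {
    "timeout": Node(
        "Time-out before bedside thoracentesis: a chest x-ray is on screen.",
        {
            "verify image": ("Image checked against the patient.", "proceed"),
            "skip check": ("The image was never verified.", "missed"),
        },
    ),
    "proceed": Node("Time-out complete; the team proceeds."),
    "missed": Node("A wrong image may have gone undetected."),
}


def run(scenario, start, picks):
    """Walk the scenario; return the feedback shown and the final node id."""
    node_id, feedback = start, []
    for pick in picks:
        message, node_id = scenario[node_id].choices[pick]
        feedback.append(message)
    return feedback, node_id


feedback, end = run(SCENARIO, "timeout", ["skip check"])
print(end, feedback)
```

Attaching feedback to each choice, rather than to each node, mirrors the idea that the synthetic instructor's response varies with what the learner decided.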

Data analysis

Outcome measurements. The independent variable controlled in the study was the type of educational format utilized to teach time-outs to medical residents, either HFS or VPS (Thompson & Panacek, 2007). The dependent variable measured in the study was the subject’s self-reported confidence in conducting time-outs. Participants at a patient safety workshop, divided into HFS and VPS groups, completed the questionnaire following their simulation experience.

Statistical analysis. Demographic data, including each subject’s level of training, were compared between the two groups to check for equivalent baseline characteristics. Likert scale survey scores were compared between the HFS and VPS groups using a two-tailed, unpaired Student t test (Norman & Streiner, 2003). The study had a power of 95% to detect a 20% difference (a one-point change on the Likert scale) in the average confidence level of learners between the HFS and VPS groups (DSS Research, 2013).
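For readers unfamiliar with the test, the sketch below shows how the statistic for an unpaired Student t test is formed, assuming equal variances in the two groups (the pooled-variance form). The scores are invented for illustration and are not data from this study; the function name `unpaired_t` is hypothetical. Converting the statistic to a two-tailed p value requires the t distribution, which a statistics package such as SciPy (`scipy.stats.ttest_ind`) provides.

```python
from math import sqrt
from statistics import mean, variance


def unpaired_t(a, b):
    """Return (t statistic, degrees of freedom) for an unpaired Student t test.

    Uses the pooled-variance form, which assumes equal group variances.
    """
    n_a, n_b = len(a), len(b)
    df = n_a + n_b - 2
    pooled_var = ((n_a - 1) * variance(a) + (n_b - 1) * variance(b)) / df
    std_err = sqrt(pooled_var * (1 / n_a + 1 / n_b))
    return (mean(a) - mean(b)) / std_err, df


# Hypothetical 1-to-5 Likert responses, NOT data from the study.
hfs_scores = [5, 4, 5, 5, 4, 5]
vps_scores = [4, 5, 4, 4, 5, 4]

t_stat, df = unpaired_t(hfs_scores, vps_scores)
print(f"t = {t_stat:.3f}, df = {df}")
```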

Results

Survey response rate

Among the 392 faculty and residents attending the five patient safety workshops, 212 completed a survey for a response rate of 54%.

Survey results—HFS vs. VPS

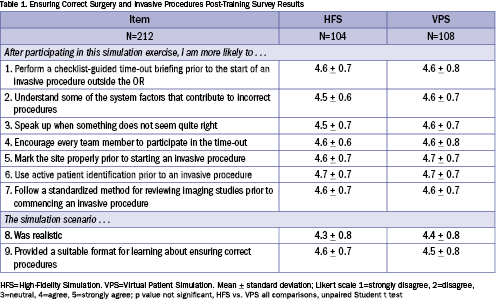

All participants. Survey results for all participants in both HFS and VPS are shown in Table 1. Participants rated their confidence in performing time-out elements highly following completion of either HFS or VPS. There was no statistically significant difference between HFS and VPS participants in self-reported survey results for the performance of any time-out element or safety behavior. Overall, learners in both the HFS and VPS groups rated the simulation experience as realistic and suitable for practicing time-outs as an effective, standardized method for error trapping.

Residents. Survey results were analyzed for 168 participants identified as residents on the survey sheet, comparing HFS to VPS. There were no statistically significant differences in confidence levels for any of the survey elements between residents taking either the HFS or VPS curricula.

Faculty. Likewise, survey results for the 44 participants who identified themselves as faculty on the survey form were examined, again comparing the HFS and VPS groups. The only statistically significant difference was for item #2: “After participating in this simulation exercise, I am more likely to understand some of the system factors that contributed to incorrect procedures.” On the 1-to-5 Likert scale (strongly disagree to strongly agree), faculty completing HFS training rated their confidence level as 5.0 compared to 4.6 for VPS training (p = 0.001, unpaired Student t test).

Discussion

The Joint Commission has endorsed a standard for the use of simulation in assessing competencies: “The hospital uses assessment methods to determine the individual’s competence in the skills being assessed. Note: Methods may include … the use of simulation” (Joint Commission, 2012). The question then becomes which method of simulation is best for teaching the Universal Protocol, including time-outs. There are no previous studies examining the use of VPS for time-out teaching. In this current well-powered, controlled trial comparing two groups of learners undergoing time-out training—one participating in VPS and one participating in HFS—there was no significant difference in learning outcomes as measured by a previously validated Ensuring Correct Surgery (ECS) survey that gauged self-confidence in performing time-outs (Paull et al., 2013).

The success of VPS in teaching time-outs was not entirely unexpected: VPS has been found beneficial in other areas of medical education. Botezatu, Hult, Tessma, & Fors (2010) found that medical students who trained with VPS in hematology and cardiology subsequently demonstrated superior scores for interviewing patients, ordering proper laboratory studies, arriving at the correct diagnosis, and implementing correct treatment. In this current study, for each element of the time-out process, learners rated their post-training self-confidence well above 4.0 on a 1 to 5 Likert scale. Taken together with the results of previous studies (Botezatu et al., 2010; Huwendiek et al., 2009; Kononowicz et al., 2012) that demonstrated positive VPS learning outcomes in medical education, the results of this study encourage further exploration of VPS as a potentially useful learning format for teaching patient safety. Despite the apparent equivalency between VPS and HFS for teaching time-outs as shown in the results of the ECS survey, the current study disclosed some important differences between the two learning formats.

Differences between HFS and VPS in teaching time-outs

Faculty rated HFS superior to VPS in response to the survey question “understand some of the system factors that contribute to incorrect procedures.” There are a number of possible reasons that HFS would be better at demonstrating the underlying, latent, systemic factors that can lead to an incorrect invasive procedure. Teamwork and communication issues are the root cause of 70% of preventable adverse events (Henkind & Sinnett, 2008). Simulation-based training utilizing a high-fidelity simulator allows teams to practice non-technical skills such as leadership, teamwork, and communication (Arora, Hull, Fitzpatrick, Sevdalis, & Birnbach, 2015).

In HFS, team members could catch one another’s errors and alert the team. This was especially noticeable for catching the wrong chest x-ray image. HFS teams usually caught the error, whereas the VPS solo learner was more likely to initially miss the wrong x-ray. Since the time-out is inherently a team function, it makes sense that providers should train in teams. Technology limited our VPS scenario to a single-learner experience, replacing “live” team members with synthetic agents within the scenario. The use of team-based VPS formats, such as the “immersive learning environment” described by Taekman & Shelley (2010), may overcome this challenge.

There were also debriefing differences between HFS and VPS in the current study that could account for the faculty’s perception of better understanding of systems issues with the HFS format. Each HFS was accompanied by an immediate debriefing of the simulation experience facilitated by experienced instructors, which occurred prior to the self-assessment survey. Human factors issues such as distractions (phone call during time-out) or automation surprises (wrong x-ray in electronic health record) were thoroughly discussed with learners. These HFS debriefings were dynamic conversations and included active listening. The VPS debriefing was more of a one-way conversation, provided by an on-screen synthetic instructor. Nonetheless, VPS may promote valuable self-reflection—meta-cognition that contributes to developing the skill of error detection in one’s own thinking.

These differences between HFS and VPS become magnified in light of literature suggesting that feedback and debriefing are the most important features of simulation-based medical education and that learners’ overall satisfaction with simulation is dependent on faculty demeanor (Cantrell, 2008; Fanning & Gaba, 2007). The live instructor following HFS creates a learning model where the facilitator is a co-learner. Open-ended questions and genuinely expressed positive reinforcement are more likely in this model. Leader and peer support for training positively affect the degree to which training is transferred into daily practice, a feature present within the HFS, but not the VPS arm of the study (Weaver, Salas, & King, 2011).

Study limitations

The current study has a number of limitations, including the lack of randomization of participants, the absence of an evaluation of competence between study groups, and the omission of a formal cost-benefit comparison between the two types of simulation for teaching time-outs. Self-selection of training methodology by study participants remains an important study limitation. The costs of either HFS or VPS can be compared to the costs of an incorrect surgery or invasive procedure. Patient safety violations are expensive, involving direct patient care, legal, accreditation, and marketing costs (Weeks & Bagian, 2003). While VPS has considerable start-up costs in terms of time spent creating a scenario, the online experience requires no special maintenance or instructor oversight. Our overall impression is that, due to reduced incremental costs over a longer period of time, VPS is more economical than HFS. Our high-fidelity simulator cost $100,000, and the instructors’ time for each one-hour HFS is estimated at $75 (D. Paull, personal communication, November 23, 2015). The cost for teaching 1,000 residents to perform time-outs using HFS would be $175 per learner ($100 for the simulator spread across 1,000 learners plus $75 of instructor time). The current VPS scenario required 246 hours to build, at $75/hour, for a total of $18,450, or $18.45 per learner for those same 1,000 participants, a savings of nearly 10-fold.
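The per-learner figures above can be reproduced with a short calculation. This sketch assumes, as the $175 figure implies, that the $75 of instructor time is charged to each learner and that the simulator purchase is spread evenly over the 1,000 learners; the function name `cost_per_learner` is hypothetical.

```python
def cost_per_learner(fixed_cost, variable_cost_per_learner, learners):
    """Spread a one-time cost over all learners and add the per-learner cost."""
    return fixed_cost / learners + variable_cost_per_learner


LEARNERS = 1_000

# HFS: $100,000 simulator plus $75 of instructor time per learner (assumed).
hfs = cost_per_learner(100_000, 75, LEARNERS)

# VPS: 246 build hours at $75/hour, with no per-learner instructor cost.
vps = cost_per_learner(246 * 75, 0, LEARNERS)

print(f"HFS ${hfs:.2f}, VPS ${vps:.2f}, ratio {hfs / vps:.1f}x")
```

Under these assumptions the ratio works out to about 9.5, which the text rounds to 10-fold. Because the fixed costs dominate both totals, the gap narrows for small cohorts and widens as the number of learners grows.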

Conclusion

The starting place for learners varies, including for patient safety and time-outs. Some clinicians function well as part of teams and seem to innately communicate effectively without regard to social and cultural barriers. The data for medical errors and resulting patient harm indicate that the majority of us are not so skilled. The final protective barrier for patients is formed of clinicians who remain aware that the healthcare system cannot yet be considered highly reliable, and who freely express concerns about potential vulnerabilities that could lead to harm. Providing a VPS experience that reveals the consequences of taking shortcuts in bottom-line procedures such as time-outs will contribute to patient safety—as well as to professional and personal growth among trainees. However, HFS provides a more robust, experiential place in which to practice and debrief the teamwork and communication skills necessary to operationalize patient safety policies and procedures in the clinical workplace.

Douglas Paull is the director of patient safety curriculum and medical simulation at the VA National Center for Patient Safety.

Linda Williams is a program specialist at the VA National Center for Patient Safety.

David Sine is the chief risk officer at the VA National Center for Patient Safety.

This material is based upon work supported by the Department of Veterans Affairs, the Veterans Health Administration, and the National Center for Patient Safety. The contents of this article are those of the authors and do not necessarily represent the views of the Department of Veterans Affairs or the United States Government.

References

Arora, S., Hull, L., Fitzpatrick, M., Sevdalis, N., & Birnbach, D. J. (2015). Crisis management on surgical wards. A simulation-based approach to enhancing technical, teamwork, and patient interaction skills. Annals of Surgery, 261, 888–893.

Blanco, M., Clarke, J. R., & Martindell, D. (2009). Wrong site surgery near misses and actual occurrences. AORN Journal, 90, 215–222.

Botezatu, M., Hult, H., Tessma, M. K., & Fors, U. (2010). Virtual patient simulation: Knowledge gain or knowledge loss? Medical Teacher, 32, 562–568.

Cantrell, M. A. (2008). The importance of debriefing in clinical simulations. Clinical Simulation in Nursing, 4, e19–e23.

Decision Simulation (2013). Virtual patient platform. Retrieved from http://decisionsimulation.com/decisionsim/

Dillon, K. A. (2008). Time out: An analysis. AORN Journal, 88, 437–442.

DSS Research (2013). Statistical power calculators. Retrieved from https://www.dssresearch.com/KnowledgeCenter/toolkitcalculators/statisticalpowercalculators.aspx

Fanning, R., & Gaba, D. M. (2007). The role of debriefing in simulation-based learning. Simulation in Healthcare, 2, 115–125.

Gottumukkala, R., Street, M., Fitzpatrick, M., Tatineny, P., & Duncan, J. R. (2012). Improving team performance during pre-procedure time-out in pediatric interventional radiology. Joint Commission Journal on Quality and Patient Safety, 38, 387–394.

Haynes, A. B., Weiser, T. G., Berry, W. R., Lipsitz, S. R., Breizat, A. S., Dellinger, E. P., … Gawande, A. A. (2009). A surgical safety checklist to reduce morbidity and mortality in a global population. New England Journal of Medicine, 360, 491–499.

Henkind, S. J., & Sinnett, C. (2008). Patient care, square-rigger sailing, and safety. Journal of the American Medical Association, 300, 1691–1693.

Huwendiek, S., Reichert, F., Bosse, H., de Leng, B., van der Vleuten, C., Haag, M., … Tonshoff, B. (2009). Design principles for virtual patients: A focus group study among students. Medical Education, 43, 580–588.

Joint Commission. (2012). Hospital Accreditation Standards. Oakbrook Terrace, IL: Author.

Kelly, J. J., Farley, H., O’Cain, C., Broida, R. I., Klauer, K., Fuller, D. C., … Pines, J. M. (2011). A survey of the use of time-out protocols in emergency medicine. Joint Commission Journal on Quality and Patient Safety, 37, 285–288.

Kononowicz, A. A., Krawczyk, P., Cebula, G., Dembkowska, M., Drab, E., Fraczek, B., … Andres, J. (2012). Effects of introducing a voluntary virtual patient module to a basic life support with an automated external defibrillator course: A randomized trial. BMC Medical Education, 12, 41.

Moreno-Ger, P., Torrente, J., Bustamante, J., Fernandez-Galaz, C., Fernandez-Manjon, B., & Comas-Rengifo, M. D. (2010). Application of a low-cost web-based simulation to improve students’ practical skills in medical education. International Journal of Medical Informatics, 79, 459–467.

Neily, J., Mills, P. D., Eldridge, N., Dunn, E. J., Samples, C., Turner, J. R., … Bagian, J. P. (2009). Incorrect surgical procedures within and outside of the operating room. Archives of Surgery, 144, 1028–1034.

Norman, G. R., & Streiner, D. L. (2003). Pretty Darned Quick Statistics. Shelton, CT: People’s Medical Publishing.

Norton, E. (2007). Implementing the universal protocol hospital-wide. AORN Journal, 85, 1187–1197.

OAA (Office of Academic Affiliations) (2013). Medical and dental education program. Retrieved from http://www.va.gov/oaa/gme_default.asp

Paull, D. E., Okuda, Y., Nudell, T., Mazzia, L. M., DeLeeuw, L., Mitchell, C., … Gunnar, W. (2013). Preventing wrong-site invasive procedures outside the operating room: A thoracentesis simulation case scenario. Simulation in Healthcare, 8, 52–60.

Reames, B. N., Krell, R. W., Campbell, D. A., & Dimick, J. B. (2015). A checklist-based intervention to improve surgical outcomes in Michigan. Evaluation of the Keystone Surgery Program. JAMA Surgery, 150(3), 208–215.

Rosen, M. A., Salas, E., Wilson, K. A., King, H. B., Salisbury, M., Augenstein, J. S., … Birnbach, D. J. (2008). Measuring team performance in simulation-based training: Adopting best practices for healthcare. Simulation in Healthcare, 3, 33–41.

Taekman, J. M., & Shelley, K. (2010). Virtual environments in healthcare: Immersion, disruption, and flow. International Anesthesiology Clinics, 48(3), 101–121.

Thompson, C. B., & Panacek, E. A. (2007). Sources of bias in research design. Air Medical Journal, 26, 166–168.

Triola, M., Feldman, H., Kalet, A. L., Zabar, S., Kachur, E. K., Gillespie, C., … Lipkin, M. (2006). A randomized trial of teaching clinical skills using virtual and live standardized patients. Journal of General Internal Medicine, 21, 424–429.

VHA (2010a). Facility quality and safety report. Retrieved from http://www.va.gov/health/docs/HospitalReportCard2010.pdf

VHA (2010b). Locations. Veterans Integrated Service Networks (VISN). Retrieved from http://www.va.gov/directory/guide/division.asp?dnum=1

VHA (2013). Ensuring correct surgery and invasive procedures. Retrieved from http://www.va.gov/vhapublications/ViewPublication.asp?pub_ID=2922

Weaver, S. J., Salas, E., & King, H. B. (2011). Twelve best practices for team training evaluation in healthcare. Joint Commission Journal on Quality and Patient Safety, 37, 341–349.

Weeks, W. B., & Bagian, J. P. (2003). Making the business case for patient safety. Joint Commission Journal on Quality and Patient Safety, 29, 51–54.