A System-Based Approach to Managing Patient Safety in Ambulatory Care (and Beyond)

For years, providers of all backgrounds have recognized the need for a systematic approach to supporting safe and effective care for patients in the home and community.

By Charles Burger, MD; Paula Eaton; Kalie Hess, MPH; Ashley Mills, MHA; Maureen O’Connor, MA; Darcy Shargo, MFA; Caroline Zimmerman, MPP; Sky Vargas, MBA; and Jeff Brown, MEd

Understanding and improving inpatient and ambulatory patient safety requires the adoption, implementation, and refinement of models and methods for system-based safety management that have had limited traction in healthcare. This article proposes that 1) the sociotechnical system model advocated by the Institute of Medicine (now the National Academy of Medicine) responds to this need and is salient to any care context, including primary and specialty care, hospital, home, and community settings; and 2) the systems development discipline of cognitive systems engineering, which focuses on sociotechnical systems, provides methodology for the analysis and improvement of patient safety and efficacy of care, irrespective of setting.

Case: Evelyn’s fall

Evelyn1 was a 72-year-old woman with diabetes and heart disease. She lived alone, 10 miles from the nearest town and primary care practice. She relied on an older cousin for transportation to the grocery store and appointments with her primary care provider.

Following a cardiac event, Evelyn was hospitalized, and her doctor increased her warfarin dosage during her stay. Upon discharge, she received a prescription for the new dosage, but the local pharmacy did not have pills in stock at the dosage prescribed. The pharmacist filled the prescription with pills of lesser strength, advising Evelyn to take three pills at the prescribed interval to meet the required dosage. Evelyn successfully followed these instructions, and when she refilled the prescription, she continued to take three pills. Within a week of the refill, she began to say that she felt “really tired,” and soon afterward, she started coughing up blood. A smoker since her early teens, she told her cousin that she thought she had lung cancer and was afraid to go to the doctor for fear of confirming this. She canceled her next office visit, which was a routine check to determine if her warfarin prescription needed adjustment. Her cousin asked her repeatedly to make another appointment, to no avail. Within a week of the canceled visit, her cousin found her dead in her home, evidently after striking her head against her wood stove during a fall. Her cousin said that in the week leading up to her death, Evelyn had been increasingly lightheaded.

The foregoing case might be viewed as a tragic, yet not surprising outcome for a chronically ill and elderly person living alone, intrinsically at risk of falling. However, Evelyn’s fall might have been prevented. A medication review revealed that when the pharmacy refilled her warfarin prescription, each pill now contained the total dosage prescribed. By following earlier instructions to take three pills, Evelyn had inadvertently taken three times the prescribed dose. Warfarin overdose can result in internal bleeding, which can present as coughing up blood and lightheadedness. Evelyn’s medical history documents poor comprehension of written and verbal instruction, and poor vision due to diabetic retinopathy. The pharmacist didn’t have access to these notes, and Evelyn declined pharmacist counsel when she received her prescription. Evelyn likely did not read or did not comprehend the new prescription instructions.
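The arithmetic behind the overdose can be made explicit. The sketch below is illustrative only. The case does not report pill strengths in milligrams, so strengths are expressed as fractions of the prescribed dose. The one-third strength of the first fill is an assumption inferred from the instruction to take three pills, and the function name `dose_taken` is hypothetical.

```python
from fractions import Fraction


def dose_taken(pills_per_dose, pill_strength):
    """Return the dose actually taken, as a multiple of the prescribed dose.

    pill_strength is the strength of one pill expressed as a fraction of
    the prescribed dose (an illustrative unit; no milligram values are
    given in the case).
    """
    return pills_per_dose * pill_strength


# Evelyn kept taking three pills per dose across both fills (from the case).
PILLS_PER_DOSE = 3

# First fill: lower-strength pills. One third of the prescribed dose per
# pill is an assumption inferred from the "take three pills" instruction.
first_fill = dose_taken(PILLS_PER_DOSE, Fraction(1, 3))

# Refill: each pill contained the total prescribed dose (from the case).
refill = dose_taken(PILLS_PER_DOSE, Fraction(1, 1))

print(f"First fill: {first_fill} x prescribed dose")
print(f"Refill:     {refill} x prescribed dose")
```

The pill count stayed constant while the pill strength changed, so a check that compares only the number of pills taken against earlier instructions would not have caught the error.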

Evelyn’s case illustrates that iatrogenic harm in ambulatory care may exhibit a period of slow worsening that is either undetected or not recognized as being linked to the patient’s treatment or therapy. The conditions that gradually emerged to provoke Evelyn’s fatal accident stemmed from impediments to communication and coordination among the organizations that supported her care, the existence of environmental hazards in the home, the absence of medication management support in the home, and Evelyn’s cognitive and physical limitations, among other factors. Neither Evelyn, nor anyone in her family, pharmacy, hospital, or primary care office, had sufficient systematic support for detecting her mistake and intervening in her worsening and ultimately fatal situation.

For years, providers of all backgrounds have recognized the need for a systematic approach to supporting safe and effective care for patients in the home and community. Until recently, there has been meager financial incentive to drive the inter-organizational collaboration required to address these needs. Risk-based payment models established by government and commercial payers are designed to incent better management of chronic conditions and avoidance of acute illness (Burwell, 2015). These “carrot and stick” payment models have catalyzed efforts throughout the United States to improve management of patients with chronic conditions. Yet, although effective management of ambulatory patient safety is integral to these efforts, approaches to detecting, identifying, analyzing, and mitigating iatrogenic harm in home and community-based care remain woefully underdeveloped (Shekelle et al., 2016; Vincent & Amalberti, 2016).

Do models and methods for managing patient safety in hospitals apply to ambulatory patient safety?

Patient safety researchers have predominantly focused on accidental patient injury and death in hospital settings (Shekelle et al., 2016). In this context, patient safety activities often focus on improving publicly reported measures specified by payers, accrediting organizations, and regulators. Although an organization may perform well on these measures, this approach will not necessarily yield insight into the underlying conditions for failure and harm that may manifest in unmonitored ways. Had Evelyn bled to death in her recliner, her death might have been attributed to “medication error” instead of a “fall.” Both classifications reflect surface-level manifestations of shared contributing factors—they are “phenotypes,” variable expressions of underlying conditions for harm in the context of care.2

Patient safety improvement efforts focus, inordinately, on a rising number of such phenotypic measures that may distract us from investigating and addressing underlying conditions for failure that may yield harm in other ways. If we misunderstand safety management to be a matter of improving performance on a growing number of narrowly defined safety measures, we may be oblivious to conditions for failure that are untouched by our approach to improvement—a consequence of managing measures rather than risk and safety (Hollnagel, 2014). This is an important concept when we consider why improvement of patient safety in the inpatient context has been slow (Dixon-Woods & Pronovost, 2016). The following is an excerpt from an interview with a bedside nurse, collected as part of a study to develop design requirements for a clinical decision aid.3 The situation she described illustrates how unsafe conditions may emerge, persist, and evolve unchecked—compelling frontline personnel to develop “workarounds” in an effort to meet patients’ needs.

Case: Medication mayhem

Our medical center implemented a new computer system for ordering medications last year. At the same time, a policy was implemented that prohibits us from making medication requests by phone or on paper forms using the pneumatic tube—neither of which were ideal, but they worked. Our patients have serious burns over much of their bodies. It is important that they be kept sedated, and we use a ketamine solution for that—we don’t want them to experience pain. But the new computer system is so clunky—each pharmacist is assigned three to four ICUs, and when I put in the order for more ketamine, she will not see it on her computer screen until she happens to click on the tab for my unit. She gets no alert or flag when an order comes—she just has to remember to keep looking at each ICU’s screen in the midst of everything else she is doing. When she does see the order, she has to write it down and then manually re-enter the order into another computer system, at a different workstation, and then she has to do all the required pharmacy checks so that it can be filled. We started to have patients come out of sedation because the orders weren’t being filled on time—the pharmacist might be juggling six or more stat orders and a bunch of more routine re-orders at any given time. As nurses began to worry about where their meds were, they would call the pharmacy, interrupting an already busy and interrupted situation for the pharmacist. We started making reminder requests [from the ICU] at least two hours in advance and then following up with regular phone calls, but the only way to make sure our patients didn’t come out of sedation was to violate another hospital policy. We aren’t supposed to mix the ketamine into solution at the bedside, because it could harm the patient if we get the concentration wrong. 
But we couldn’t get the hospital to fix the problem with medication ordering; they just wrote another policy requiring a timed response from the pharmacist, beginning when the order was requested. This didn’t correct any of the computer and workflow issues—the things that bogged the pharmacy down, and us. So, we started mixing ketamine at the bedside—having things ready in case the pharmacy didn’t get the solution to us in time. We could be fired for this, but following policy means the patient can suffer horribly.

The hospital’s introduction of a new computer program for medication ordering precipitated significant disruption of workflow in the pharmacy and (hence) the ICUs it served. As delay in pharmacy order fulfillment increased, so did the number of phone calls and other communication to the pharmacy from the floors, as nurses sought the whereabouts of the medications their patients urgently needed. The pharmacists’ work was continually interrupted under these conditions, not only delaying order fulfillment and patient care, but exacerbating opportunity for errors in medication preparation (Connor et al., 2016; Grundgeiger et al., 2016). Although staff reported the problems with medication ordering to the information technology department and quality improvement department, the reports were not adequately investigated in the context of the work settings involved. A policy requiring timely fulfillment of medication orders did not resolve the conditions that undermined reliable performance. Given that the problem did not resolve, nursing staff rapidly normalized the risk of mixing ketamine at the bedside to ensure that burn patients would not suffer loss of sedation; a risky workaround became standard practice.

Workarounds as a signal of risk

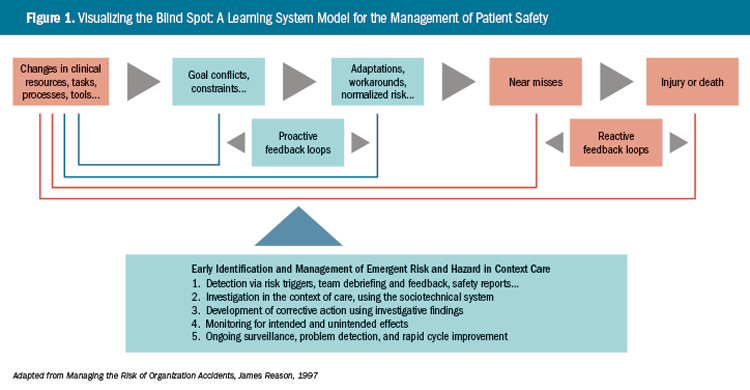

Ample research has shown that workarounds may signal the presence of unsafe or error-provoking conditions (Brown, 2005; Dekker, 2011). These may stem from local changes to technology, staffing, processes, work environment, or work organization. Similarly, changes within units of the same organization, such as a pharmacy, or even within external entities, may have unanticipated effects on risk and safety in the units they interact with. Workarounds may be rapidly normalized if the conditions that provoke them are not effectively detected, investigated, and resolved; they may thus remain hidden in plain sight until they yield injury or death (Figure 1).4

As illustrated in Figure 1, proactive detection and investigation of failure-provoking conditions, and intervention in them, require continual surveillance for signs of change in practice or performance, and for the potential of those changes to trigger patient or provider harm. Seemingly innocuous changes may produce unanticipated and unsafe conditions, even though the change that initiated trouble may have occurred in a separate work unit, or perhaps within another institution. Today’s improvement (e.g., a new pharmacy computer application) may quickly catalyze tomorrow’s clinical calamity (burn patients coming out of sedation on the ICU) and policy violation (nurses mixing ketamine solution at the bedside).

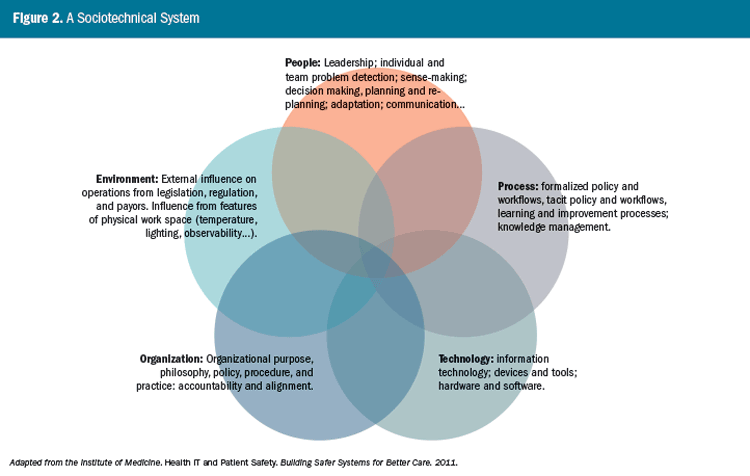

Differing states of development, common need

Unanticipated effects of change—side effects—that cross time and geography are characteristic of system behavior, as seen in both our ambulatory and inpatient cases. In any care setting, the interactions among people, environment, technologies, organization, and processes shape overall performance and the prospect of achieving intended outcomes. Yet system-based approaches to managing patient safety are underused. In an effort to advance such approaches, the Institute of Medicine (now the National Academy of Medicine) proposed a sociotechnical system (STS) model (Figure 2) to illustrate how interactions among the components of socially and technologically complex systems shape outcomes (Institute of Medicine, 2011). Although the STS model is a static representation of system dynamics, it is intended to show that adverse events stem from the unanticipated effects of interactions among system components in the context of care.

Any context of care—whether the home, hospital, or provider office—will have the five system components identified in the STS model: people, process, technology, environment, and organization.5 Proactive assessment of risk in any clinical setting requires that we identify how these components interact and how they shape performance. Similarly, in adverse event investigation, it is necessary to seek a retrospective understanding of the interactions among system components, in the context of the event. A key goal of investigation is to tell the story of the situation as it evolved, along a timeline leading to injury or death, and any subsequent recovery efforts that may have been attempted; to understand and describe the event by eliciting the experience of those involved (Dekker, 2014; Cook, Woods, & Miller, 1998).
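As one possible starting point for a proactive assessment, the five components can be paired to generate prompts for observation and interviews. This is a minimal sketch, not part of the STS model itself: the pairwise framing and the function name `interaction_pairs` are assumptions made for illustration, and real interactions often involve more than two components at once.

```python
from itertools import combinations

# The five system components named in the STS model (from the text).
STS_COMPONENTS = ("people", "process", "technology", "environment", "organization")


def interaction_pairs(components):
    """Return every unordered pair of components as a list of tuples.

    Pairwise enumeration is a simplifying assumption; it only seeds
    questions and does not capture higher-order interactions.
    """
    return list(combinations(components, 2))


pairs = interaction_pairs(STS_COMPONENTS)

# Turn each pair into an open-ended prompt for the people doing the work.
for first, second in pairs:
    print(f"How do {first} and {second} interact in this setting?")
```

In the burn ICU case, for example, the technology and process pair would prompt questions about how the new ordering application changed the pharmacist's workflow.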

Cognitive systems engineering (CSE)

To apply the sociotechnical system model for the improvement of patient safety, methods are needed that illuminate how performance is shaped dynamically, through the interaction among system components—bearing in mind that the human is the system’s most adaptive component. Increasingly, methods from a specialty discipline of systems engineering, called cognitive systems engineering (CSE), have been brought to bear in healthcare for this purpose (Bisantz, Burns, & Fairbanks, 2014). Uniting the National Academy of Medicine’s (NAM) STS model with CSE methods provides patient safety professionals with a foundational framework and approach to system-based safety management.

CSE methods have evolved over the past 40 years to describe and integrate human capability into socially and technologically complex systems. CSE professionals strive for optimal integration of human capabilities to improve system functionality, reliability, and safety. The term “cognitive” in cognitive systems engineering reflects the focus of this discipline on effectively supporting and using human cognitive capabilities such as problem detection, decision-making, coordinating, managing, and adapting (Patterson & Miller, 2012).

The safety and quality of healthcare is tightly coupled with the human capacity to perceive a problem, make sense of what is happening, and intervene to avert or mitigate harm (Woods & Branlat, 2011). In Evelyn’s case, there was insufficient systematic support for anyone involved in her care to detect and intervene in her self-dosing error. In the burn ICU case, a problem was detected and reported, but not adequately analyzed and addressed. These are common findings when investigating adverse events in sociotechnical systems—regardless of industry or domain. Safe and effective system performance hinges on the human capacity to detect problems, analyze them, and adapt activity and action in time to ensure safety, or to mitigate and recover from adverse events (Klein, 2013).

Despite ample research that implicates flawed systems in cases of accidental patient injury and death, it remains stunningly common in healthcare to blame those closest to a patient safety event for its occurrence. In hindsight, we could choose to fault Evelyn, her pharmacist, her primary care physician, or even her cousin for her death. However, this orientation is cruel and inappropriate, and it gets us nowhere in our efforts to reduce accidental patient injury and death. A more appropriate response is to focus on developing approaches to improving support for the human capabilities that drive the adaptive capacity of the system: the ability to recognize that something is awry and to avert, or mitigate and recover from, unsafe situations. Although it can be difficult in practice, improvement of patient safety ultimately requires a commitment to identifying, analyzing, and intervening in emerging risk factors wherever they originate, whether in the immediate environment or in a distant organization.

As illustrated by our two cases, care in the home and community poses safety monitoring and problem detection challenges that differ from inpatient care, where monitoring and observation by professional caregivers is, at least theoretically, more likely. However, both ambulatory and inpatient care are undertaken within a sociotechnical context. The methods of cognitive systems engineering provide an approach to improving risk mitigation and safety management, regardless of setting. Although CSE methods are not a panacea for the improvement of patient safety, and the NAM sociotechnical system model is not the only one in existence, together—coupled with principles of good system design—they provide the rudiments needed to explore how the interactions of system components are influencing risk and safety, whatever the context of care (Bahill & Botta, 2008).

Resources

Numerous publications that describe CSE methods and their application are cited in this article. Observation and interviewing skills are foundational to CSE analytic and design frameworks, and a growing number of publications provide guidance for those who wish to develop these capabilities (Agency for Healthcare Research and Quality, n.d.; Crandall, Klein, & Hoffman, 2006; Klein, 2008). Among these resources is an Agency for Healthcare Research and Quality publication focused on the value of cognitive task analysis for the improvement of patient-centered medical homes, whose existence is a sign that the methodological tools of CSE are becoming more mainstream (Potworowski & Green, 2013).

The “system of interest” for patient safety improvement is ultimately defined by the patient’s interactions with providers, and the communication in support of that patient’s care among professionals, patients, and lay caregivers. Although hospital-based patient interactions with clinicians are typically of short duration, the ambulatory patient’s face-to-face engagement with primary care providers is even more fleeting. Patients’ experience of healthcare occurs mostly beyond the walls of inpatient and outpatient facilities and is shaped extensively within the home and community, where social determinants of health can contribute powerfully to the risk of iatrogenic harm. A focus on ambulatory patient safety is overdue, and CSE offers a valuable approach for investigating, analyzing, and improving ambulatory patient safety without being limited to a specific setting; this system-based approach to improvement applies wherever the patient’s path may lead us.

About the Authors:

The authors’ professional foci include internal medicine, human factors and cognitive engineering, practice management, performance improvement, primary care integration, health information technology, public health, health policy, and social determinants of quality and safety in home and community-based care. All but Caroline Zimmerman provide technical assistance to Maine’s Community Health Centers for the Maine Primary Care Association in Augusta. Zimmerman’s affiliation is with MaineHealth in Portland.

Endnotes:

1 This case reflects actual patient safety events involving warfarin, but Evelyn is a fictional character.

2 Seemingly disparate patient safety events may have common origins, just as a litter of kittens may vary in fur patterns and colors, yet come from the same parents.

3 Brown, Jeff. Field notes from a cognitive work analysis study in a major medical center.

4 This medical center had banners at campus entrances advertising its hospital safety score of “A,” awarded by an external entity. This “safety grade” was based on the facility’s performance on reported measures. The existence of myriad unsafe/failure-provoking conditions in each of the clinical settings investigated during this study was unmeasured, normalized in practice, and invisible to hospital leaders and the outside world.

5 The Institute of Medicine used the term “external environment” rather than simply “environment,” as used in Figure 2. We use the term “environment” to accommodate both factors in the local environment and influences on local performance from external environments.

REFERENCES

Agency for Healthcare Research and Quality (n.d.). Critical decision method. Retrieved from https://healthit.ahrq.gov/health-it-tools-and-resources/evaluation-resources/workflow-assessment-health-it-toolkit/all-workflow-tools/critical-decision-method

Bahill, A. T., & Botta, R. (2008). Fundamental principles of good system design. Engineering Management Journal, 20(4), 9–17.

Bisantz, A. M., Burns, C. M., & Fairbanks, R. J. (Eds.). (2014). Cognitive systems engineering in health care. CRC Press.

Brown, J. P. (2005). Key themes in healthcare safety dilemmas. In M. Patankar, J. P. Brown, & M. D. Treadwell, Safety ethics: Cases from aviation, healthcare, and occupational and environmental health. Farnham, UK: Ashgate Publishing, Ltd.

Burwell, S. M. (2015). Setting value-based payment goals—HHS efforts to improve U.S. health care. N Engl J Med, 372(10), 897–899.

Connor, J. A., Ahern, J. P., Cuccovia, B., Porter, C. L., Arnold, A., Dionne, R. E., & Hickey, P. A. (2016). Implementing a distraction-free practice with the Red Zone Medication Safety initiative. Dimens Crit Care Nurs, 35(3), 116–124.

Cook, R. I., Woods, D. D., & Miller, C. (1998). A tale of two stories: Contrasting views of patient safety. Chicago: National Patient Safety Foundation.

Crandall, B., Klein, G. A., & Hoffman, R. R. (2006). Working minds: A practitioner’s guide to cognitive task analysis. MIT Press.

Dekker, S. (2011). Drift into failure: From hunting broken components to understanding complex systems. Farnham, UK: Ashgate Publishing, Ltd.

Dekker, S. (2014). The field guide to understanding ‘human error’. Farnham, UK: Ashgate Publishing, Ltd.

Dixon-Woods, M., & Pronovost, P. J. (2016). Patient safety and the problem of many hands. BMJ Qual Saf, 25(7), 485–488.

Grundgeiger, T., Dekker, S., Sanderson, P., Brecknell, B., Liu, D., & Aitken, L. M. (2016). Obstacles to research on the effects of interruptions in healthcare. BMJ Qual Saf, 25(6), 392–395.

Hollnagel, E. (2014). Safety-I and safety-II: The past and future of safety management. Farnham, UK: Ashgate Publishing, Ltd.

Institute of Medicine (2011, November). Health IT and patient safety: Building safer systems for better care. Washington, DC: The National Academies Press.

Klein, G. (2008). Naturalistic decision making. Human Factors, 50(3), 456–460.

Klein, G. (2013). Seeing what others don’t: The remarkable ways we gain insights. PublicAffairs.

Patterson, E. S., & Miller, J. E. (Eds.). (2012). Macrocognition metrics and scenarios: Design and evaluation for real-world teams. CRC Press.

Potworowski, G., & Green, L. A. (2013, February). Cognitive task analysis: Methods to improve patient-centered medical home models by understanding and leveraging its knowledge work. Rockville, MD: Agency for Healthcare Research and Quality. AHRQ Publication No. 13-0023-EF.

Shekelle, P. G., Sarkar, U., Shojania, K., Wachter, R. M., McDonald, K., Motala, A. … Shanman, R. (2016, October). Patient safety in ambulatory settings. Technical Briefs, No. 27. (Prepared by the Southern California Evidence-Based Practice Center under Contract No. 290-2015-00010-I.) AHRQ Publication No. 16-EHC033-EF. Rockville, MD: Agency for Healthcare Research and Quality. https://psnet.ahrq.gov/resources/resource/30459

Vincent, C., & Amalberti, R. (2016). Safer healthcare: Strategies for the real world. Springer International Publishing.

Woods, D. D., & Branlat, M. (2011). Basic patterns in how adaptive systems fail. In E. Hollnagel, J. Pariès, D. D. Woods, & J. Wreathall (Eds.), Resilience engineering in practice: A guidebook (pp. 127–144). CRC Press.